Much has been written about the impending artificial intelligence singularity — the point at which machine intelligence outstrips humankind’s, promptly enslaves it, and potentially causes the universe to collapse. Some people think the bot takeover is not far off.

But what do we do in the meantime? Well, given the danger, we should keep a close eye on these bots…by carefully instrumenting them via testing, monitoring, and analytics 🙂 Together, we refer to this instrumentation as diagnostics. And, interestingly, we are seeing a convergence here as well. It has far less apocalyptic implications, but it does have many practical ones. What are they and what is driving them?

Why Diagnostics?

Diagnostics is a broad term, and an overloaded one too. We use it to mean the full set of activities that ensure a system is behaving correctly. This spans backend considerations through to user experience: everything from detecting security anomalies to discovering why users are dropping off at a particular point in the UI.

Why bother with such a broad term? Because much of the existing categorization is driven not by who needs the data but by where the data comes from. For example, in a modern web app, UX-related data comes from JavaScript-based instrumentation tools like Google Analytics, while system errors come from backend servers, which may use newer technologies like Docker but still rely on nearly ancient Unix tools, like syslog, to capture machine data.

The singularity term applies here because the distance between these data capture points has collapsed with new AI-based approaches. Our traditionally separate, though perhaps parallel, lines of data are now integrated as one. Stand back! Don’t cross the streams.

What’s Different With Alexa Skills and Bots?

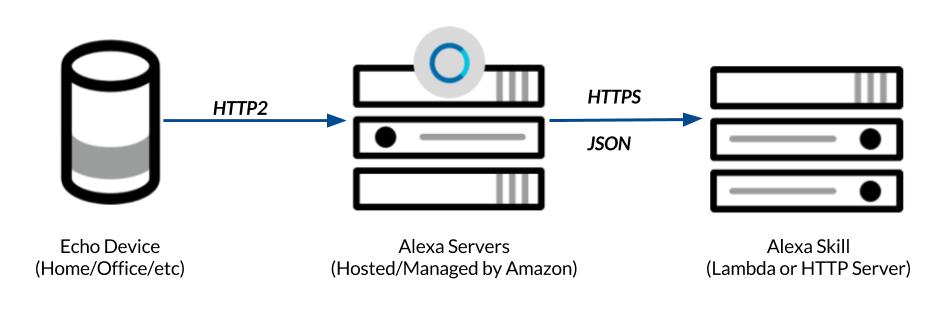

We previously discussed how the Alexa programming model, and Webhook-driven development in general, is different (and better).

A quick refresher:

The key takeaway: Bots are not running on the device. Instead, they are running somewhere in the cloud on a bot maker’s own computing resources. The API is no longer an API in the way developers are normally accustomed to. Instead, it consists of simple request/response JSON payloads passed back and forth between platforms like Alexa or Google Assistant and the bots they enable.
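To make the request/response model concrete, here is a minimal sketch of a webhook handler. The payload shape is a simplified, Alexa-style structure (real payloads carry more fields such as session and context), and the function name `handle_request` and the `HelloIntent` intent are assumptions made for illustration.

```python
import json


def handle_request(payload: dict) -> dict:
    """Turn one incoming request payload into one response payload."""
    request = payload.get("request", {})
    if request.get("type") == "IntentRequest":
        # The platform has already resolved the user's speech to an intent name.
        intent_name = request.get("intent", {}).get("name", "")
        text = f"You invoked {intent_name}."
    else:
        # Anything else (for example a launch request) gets a generic greeting.
        text = "Welcome."
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


# Hypothetical request, trimmed to the fields the handler reads.
example_request = {
    "version": "1.0",
    "request": {"type": "IntentRequest", "intent": {"name": "HelloIntent"}},
}
example_response = handle_request(example_request)
print(json.dumps(example_response, indent=2))
```

Everything the user said and everything the bot answered passes through this one function, which is what makes it such a convenient place to observe.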

What is the implication? With bots, both the user interface and the technical interface are captured within a single sequence of payloads, directed at a single point of entry managed by the bot developer. Thus, if we properly instrument our Alexa Skills and Google Actions, we can use this concentration of API, UX, and data to accomplish nearly everything across our diagnostic categories.
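One way to picture instrumenting that single point of entry is a wrapper around the handler. This is only a sketch under stated assumptions: `instrument`, `echo_handler`, the record fields, and the in-memory `records` list are all hypothetical stand-ins, and a real setup would ship records to a logging or analytics service instead.

```python
import time
from typing import Callable

Handler = Callable[[dict], dict]


def instrument(handler: Handler, sink: list) -> Handler:
    """Wrap a handler so every call appends one diagnostic record to sink."""

    def wrapped(payload: dict) -> dict:
        started = time.perf_counter()
        error = None
        response = None
        try:
            response = handler(payload)
            return response
        except Exception as exc:
            error = repr(exc)
            raise
        finally:
            request = payload.get("request", {})
            sink.append({
                # User-facing data: what the user asked for, and whether the session ended.
                "request_type": request.get("type"),
                "intent": request.get("intent", {}).get("name"),
                "ended_session": (response or {}).get("response", {}).get("shouldEndSession"),
                # Machine data: how long the call took, and whether it failed.
                "latency_ms": (time.perf_counter() - started) * 1000.0,
                "error": error,
            })

    return wrapped


def echo_handler(payload: dict) -> dict:
    """Hypothetical handler used only to exercise the wrapper."""
    name = payload["request"]["intent"]["name"]
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": f"You invoked {name}."},
            "shouldEndSession": True,
        },
    }


records: list = []
instrumented = instrument(echo_handler, records)
instrumented({"request": {"type": "IntentRequest", "intent": {"name": "HelloIntent"}}})
print(records[0])
```

Because the wrapper sees both the request and the response, a single record carries the UX signal and the operational signal together.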

Why This Is Good News For Diagnostics

By consolidating our data, we can look more holistically at system behavior and consider the total experience of users across every dimension of app performance. This is a novel opportunity. The nature of Alexa skill and bot development even allows us to take our diagnostics a step further. Because developers must declare what users can say to the platforms (in the form of defining Intents), we can automate the process of ensuring all these system entry points are working well.
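Because the set of intents is declared up front, a check over every entry point can be generated rather than written by hand. The sketch below assumes a hypothetical list of declared intents and a hypothetical `sample_handler`; it treats an intent as working if the handler returns non-empty speech without raising.

```python
from typing import Callable, Dict, List

# Hypothetical intents, standing in for whatever the skill's interaction model declares.
DECLARED_INTENTS = ["HelloIntent", "HelpIntent", "OrderStatusIntent"]

# Hypothetical skill logic: note that OrderStatusIntent is declared but not handled.
RESPONSES = {"HelloIntent": "Hello there.", "HelpIntent": "You can say hello."}


def sample_handler(payload: dict) -> dict:
    name = payload["request"]["intent"]["name"]
    text = RESPONSES[name]  # raises KeyError for an unhandled intent
    return {"version": "1.0", "response": {"outputSpeech": {"type": "PlainText", "text": text}}}


def build_intent_request(intent_name: str) -> dict:
    """Build a minimal, simplified request payload for one intent."""
    return {"version": "1.0", "request": {"type": "IntentRequest", "intent": {"name": intent_name}}}


def check_intents(handler: Callable[[dict], dict], intents: List[str]) -> Dict[str, bool]:
    """Call the handler once per declared intent and record pass or fail."""
    results = {}
    for name in intents:
        try:
            response = handler(build_intent_request(name))
            speech = response.get("response", {}).get("outputSpeech", {}).get("text", "")
            results[name] = bool(speech.strip())
        except Exception:
            results[name] = False
    return results


results = check_intents(sample_handler, DECLARED_INTENTS)
for name, ok in results.items():
    print(f"{name}: {'ok' if ok else 'FAILED'}")
```

Run on a schedule, a loop like this doubles as a basic monitor: a declared intent that stops answering shows up as a failure before users report it.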

But There Is Some Bad News

Intrinsic to all this is that the AI is taking on more work. This can be positive in many ways. For example, the developer has a lighter workload to accomplish the same UX task. Tools like Dialogs for Alexa and Slot-Filling for API.AI show how the AI platforms can simplify a developer’s life.

However, the more control developers cede to the AI, the more vulnerable they become to its intrinsic inconsistencies. Fundamental to machine learning is that the underlying platforms are evolving. As the AI evolves, how an application behaves may change, and not always for the better. Even if the AI were always getting smarter, unpredictability by itself can be a problem. It’s not a Terminator/SkyNet-scary type of scenario, but it is a barrier to delivering reliable, consistent user experiences.
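One way to notice this kind of change is to keep a baseline of how known utterances resolved and compare it against what is observed later. The sketch below assumes both mappings have already been collected somehow; the utterances, intent names, and the `find_drift` helper are hypothetical, and this is an illustration of the idea rather than a description of any particular tool.

```python
from typing import Dict, List, Optional, Tuple


def find_drift(
    baseline: Dict[str, str],
    observed: Dict[str, Optional[str]],
) -> List[Tuple[str, str, Optional[str]]]:
    """Return (utterance, expected_intent, actual_intent) for every mismatch."""
    drift = []
    for utterance, expected in sorted(baseline.items()):
        actual = observed.get(utterance)  # None if the utterance was not observed at all
        if actual != expected:
            drift.append((utterance, expected, actual))
    return drift


# Hypothetical data: how the platform resolved each utterance when the baseline was recorded...
baseline = {
    "say hello": "HelloIntent",
    "what can I say": "HelpIntent",
    "what's my order status": "OrderStatusIntent",
}
# ...and how it resolves the same utterances after the platform's models changed.
observed = {
    "say hello": "HelloIntent",
    "what can I say": "HelpIntent",
    "what's my order status": "HelpIntent",
}

drift = find_drift(baseline, observed)
for utterance, expected, actual in drift:
    print(f"'{utterance}': expected {expected}, got {actual}")
```

The skill's own code is identical in both runs; only the platform's interpretation moved, which is exactly the kind of change that is invisible without monitoring.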

This supports our belief that it is critical to have thorough monitoring tools in place to ensure reliable bot behavior.

Who Cares About This Stuff?

| | OpsLand | UserLand |
|---|---|---|
| Developer | X | X |
| DevOps | X | |
| Product | X | X |
| Marketing | | X |

We use the terms OpsLand and UserLand to define the audiences for consuming this data. OpsLand encompasses tools related to machine data (response times, exceptions, debugging information), while UserLand refers to data on how the user is interacting (what did they say? where did they drop off?).
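As a rough illustration of the two audiences, the same diagnostic record can be projected into an OpsLand view and a UserLand view. The field names and the `split_views` helper are assumptions made for this sketch, not a fixed schema.

```python
from typing import Dict, Tuple

# Hypothetical field groupings for the two audiences.
OPS_FIELDS = ("latency_ms", "error")
USER_FIELDS = ("utterance", "intent", "ended_session")


def split_views(record: Dict[str, object]) -> Tuple[Dict[str, object], Dict[str, object]]:
    """Project one record into (ops_view, user_view)."""
    ops_view = {key: record[key] for key in OPS_FIELDS if key in record}
    user_view = {key: record[key] for key in USER_FIELDS if key in record}
    return ops_view, user_view


# Hypothetical record captured from a single request/response exchange.
record = {
    "utterance": "what's my order status",
    "intent": "OrderStatusIntent",
    "ended_session": True,
    "latency_ms": 182.4,
    "error": None,
}

ops_view, user_view = split_views(record)
print("OpsLand: ", ops_view)
print("UserLand:", user_view)
```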

Different audiences care about different things. Marketing is not in favor of bug-ridden apps, but they are not typically going to be able to make sense of detailed log messages. By the same token, DevOps does not want a poor UX, but from where they sit, they are not able to easily quantify what that means.

What’s changed is that everyone can now see everything. The person responsible for each consideration may be different, but there is an opportunity to get away from the existing silos people live in. Of course, much of this is looking ahead a bit. Probably 50% or more of all bots available today have a single person behind them wearing all four hats. And so the diagnostics convergence is all the better for them 🙂

What Has Not Changed

When contemplating our post-singularity future, we know that a lot of the same considerations will still apply. Namely, developers of great Alexa skills and bots will take care to ensure:

- Consistent behavior

- Uptime

- Responsiveness

- API validity/conformity

- Rapid diagnosis

- User experience quality

And perhaps most important: all of these elements happening in a way that you don’t have to think about.

The way we accommodate these concerns is changing. But our goals are still essentially the same. Future articles will expand further on the implications of this, as well as what we are doing at Bespoken to make certain there’s a bright future of well-built, well-behaved bots. Right up until the moment they take over!

We are devoted to monitoring and diagnostics for bots. Try out our software at bespoken.io/dashboard.