Automated Testing, Training and Monitoring for Chatbots

Although AI has improved by leaps and bounds in recent years, it still requires constant attention to work well. That is where Bespoken can help.

We provide a full-cycle program for managing AI/NLU-based systems iteratively and continuously:

Gather

Collect real-world and artificially generated text and speech interactions across accents, dialects, and background environments.

Test

Measure the performance of the system, identify problem areas, and suggest revisions.
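On the speech side, one widely used measure of ASR performance is word error rate (WER): the word-level edit distance (substitutions, deletions, and insertions) between what the user said and what the recognizer transcribed, divided by the number of words actually said. The sketch below is illustrative only; the source does not say which metrics Bespoken reports, and the function name `word_error_rate` is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the first i-1 reference words and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: a reference word was dropped
                curr[j - 1] + 1,     # insertion: an extra word was recognized
                prev[j - 1] + cost,  # substitution, or a match when cost == 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


# One misrecognized word out of five.
print(word_error_rate("book a table for two", "book a cable for two"))  # 0.2
```

Because WER is normalized by the reference length, results from short and long utterances can be compared and averaged across a test set, which makes it easier to see which accents or environments perform worst.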

Train

Tune ASR and NLU models to improve performance: adjust model parameters, add training data, and fix configuration issues.

Monitor

Continuously track what is happening with the live system and catch issues as they occur.

Crowd-Sourced User Testing

We assist our customers with initial utterance gathering using our Device Service in conjunction with crowd-sourced task testing providers such as Amazon Mechanical Turk and Applause. Our team will gather input from real users to assist with:

- Functional testing: Ensure the application works correctly with real users.

- Utterance gathering: Acquire a complete picture of what real users will say and how they will say it.

- Usability evaluation: Validate the design and UX of your application via objective and subjective feedback from actual users.

The output of our crowd-sourced testing is then used as the basis for creating a comprehensive automated testing regimen.
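One way to picture that hand-off: each crowd-sourced response, labeled with the intent the participant was asked to express, becomes a candidate test case once near-duplicates are collapsed. This is a minimal sketch under that assumption; the `(utterance, intent)` input format and the `normalize` and `build_test_suite` helpers are hypothetical and are not part of Bespoken's Device Service.

```python
import re
from typing import Iterable, List, Tuple


def normalize(utterance: str) -> str:
    """Lowercase, replace punctuation (except apostrophes) with spaces, collapse whitespace."""
    text = utterance.lower()
    text = re.sub(r"[^\w\s']", " ", text)
    return " ".join(text.split())


def build_test_suite(gathered: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Turn hypothetical (utterance, intent) pairs from crowd-sourced sessions into unique test cases."""
    seen = set()
    suite = []
    for utterance, intent in gathered:
        key = (normalize(utterance), intent)
        # Skip empty responses and phrasings already captured for the same intent.
        if key[0] and key not in seen:
            seen.add(key)
            suite.append(key)
    return suite


gathered = [
    ("What's my balance?", "CheckBalance"),
    ("what's my  balance", "CheckBalance"),
    ("Send $50 to Sam", "TransferMoney"),
]
for utterance, intent in build_test_suite(gathered):
    print(f"{intent}: {utterance}")
```

Collapsing only trivially different phrasings keeps the suite small while preserving the genuine variety in how people ask for the same thing.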

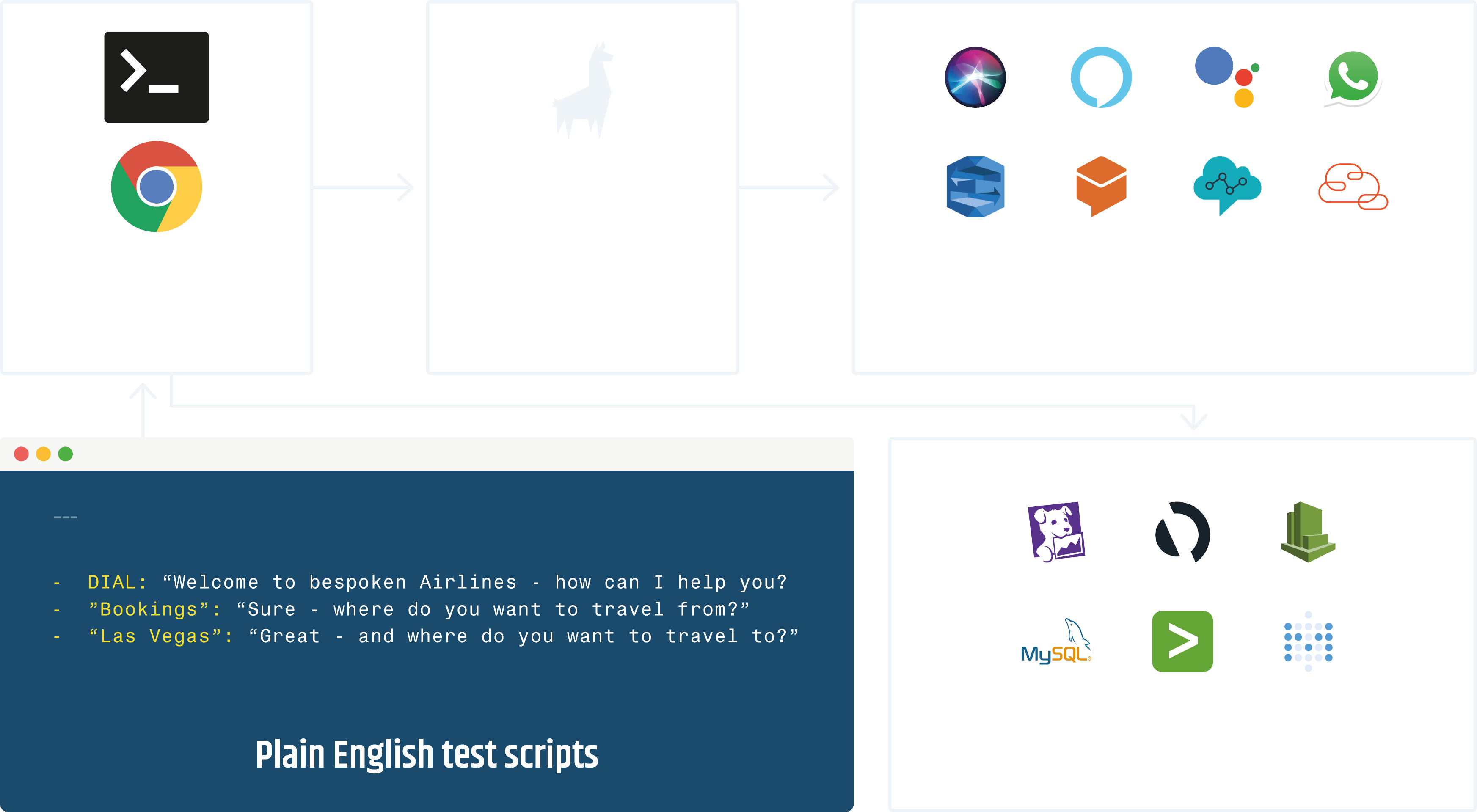

Automated Testing

Our automated testing addresses several key concerns for chat-based systems:

- Functional Testing: ensuring the system works correctly and is bug-free, automatically and repeatably.

- Monitoring: we run tests on a routine basis to ensure everything is working well in your system. If there are issues, we let you know right away.

- Accuracy Testing: we measure performance to ensure that users are consistently understood across all utterances. When they are not understood correctly, we make specific recommendations for improvement.
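At its core, an accuracy test sends each utterance to the system, compares the recognized intent with the expected one, and keeps the misses for review. The sketch below assumes the system under test can be wrapped as a function from utterance to intent name; `UtteranceCase`, `run_accuracy_test`, and the keyword-based `toy_recognizer` are hypothetical stand-ins, not Bespoken's API or a real NLU service.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class UtteranceCase:
    utterance: str
    expected_intent: str


@dataclass
class Failure:
    utterance: str
    expected_intent: str
    actual_intent: str


def run_accuracy_test(
    cases: List[UtteranceCase], recognize: Callable[[str], str]
) -> Tuple[float, List[Failure]]:
    """Send each utterance to the recognizer and compare the returned intent with the expected one."""
    if not cases:
        raise ValueError("at least one test case is required")
    failures = []
    for case in cases:
        actual = recognize(case.utterance)
        if actual != case.expected_intent:
            failures.append(Failure(case.utterance, case.expected_intent, actual))
    accuracy = (len(cases) - len(failures)) / len(cases)
    return accuracy, failures


# Hypothetical stand-in for a real NLU service: simple keyword matching.
def toy_recognizer(utterance: str) -> str:
    text = utterance.lower()
    if "balance" in text:
        return "CheckBalance"
    if "transfer" in text or "send" in text:
        return "TransferMoney"
    return "Fallback"


CASES = [
    UtteranceCase("What's my balance?", "CheckBalance"),
    UtteranceCase("Send fifty dollars to Sam", "TransferMoney"),
    UtteranceCase("How much money do I have?", "CheckBalance"),
]

accuracy, failures = run_accuracy_test(CASES, toy_recognizer)
print(f"accuracy: {accuracy:.0%}")
for f in failures:
    print(f"misunderstood {f.utterance!r}: expected {f.expected_intent}, got {f.actual_intent}")
```

Here the third utterance falls through to `Fallback`; a miss like that is the kind of result that would point toward adding the phrasing as training data for `CheckBalance`.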

All of this is driven by our unified conversation API:

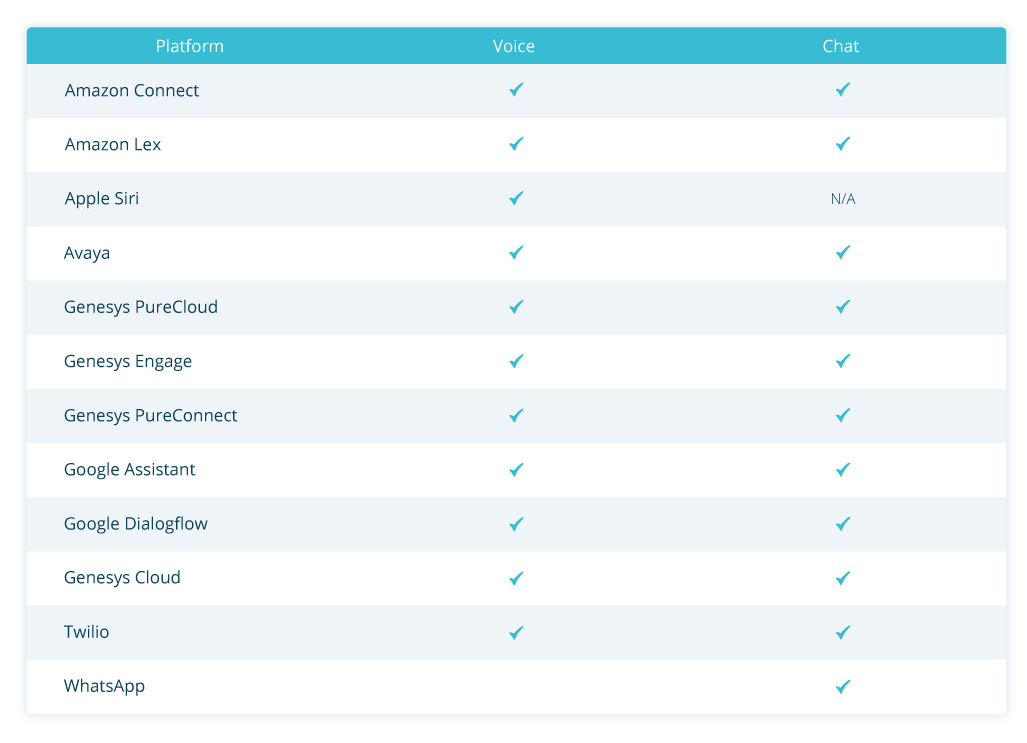

We support testing Conversational AI via the following platforms and channels:

How It Works

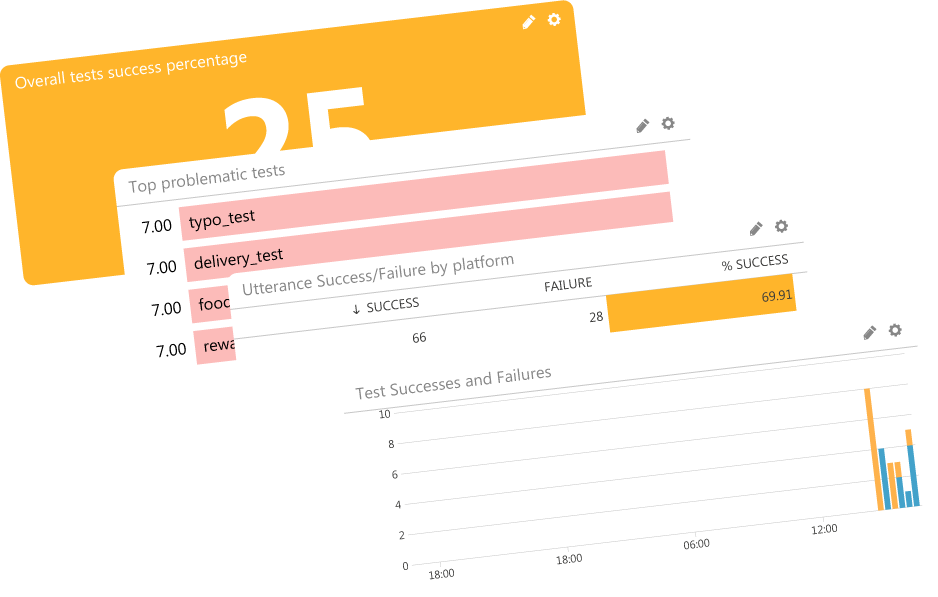

Ongoing Reporting and Alerting

Beyond the individual results for each test run, we also provide reporting on what is happening over time. Take a look here:
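Reporting over time amounts to aggregating individual test outcomes into a trend, and alerting amounts to flagging the points where that trend drops below an acceptable level. This sketch assumes each result is a `(date, passed)` pair and uses a hypothetical 95% pass-rate threshold; the helper names and the sample data are illustrative, not Bespoken's reporting format.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple


def daily_pass_rates(results: Iterable[Tuple[date, bool]]) -> Dict[date, float]:
    """Aggregate individual test outcomes into a pass rate per day, ordered by date."""
    counts = defaultdict(lambda: [0, 0])  # day -> [passed, total]
    for run_day, passed in results:
        counts[run_day][1] += 1
        if passed:
            counts[run_day][0] += 1
    return {day: ok / total for day, (ok, total) in sorted(counts.items())}


def days_needing_alert(rates: Dict[date, float], threshold: float = 0.95) -> List[date]:
    """Return the days whose pass rate fell below the (hypothetical) alert threshold."""
    return [day for day, rate in rates.items() if rate < threshold]


# Made-up sample data: four runs per day, one failure on the second day.
RESULTS = [
    (date(2024, 5, 1), True), (date(2024, 5, 1), True),
    (date(2024, 5, 1), True), (date(2024, 5, 1), True),
    (date(2024, 5, 2), True), (date(2024, 5, 2), False),
    (date(2024, 5, 2), True), (date(2024, 5, 2), True),
]

rates = daily_pass_rates(RESULTS)
for day, rate in rates.items():
    print(f"{day.isoformat()}: {rate:.0%}")
print("alert on:", [day.isoformat() for day in days_needing_alert(rates)])
```

Alerting on an aggregate rate rather than on every single failure is one way to reduce noise from transient errors; a real setup might instead alert per test or use a rolling window.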