We were excited to see that Amazon has made audio streaming available on Alexa. We had been anticipating it for some time, so we could not wait to get our hands on it!

For some time now, Alexa has offered "built-in" skills such as Spotify, Amazon Prime Music and iHeartRadio. This new offering makes it possible for developers and content publishers to build their own continuous listening experiences.

This, in concert with Alexa’s existing conversational capabilities, makes it an even more compelling platform.

The power of the new API does, though, come with some complexity and challenges. Our Bespoken tools (aka bst) can help. With them, we aim to make development frictionless: just launch our bst proxy and your laptop will be tied directly into the requests and responses of the Alexa service. You can develop and test without being slowed down by deployments, server restarts or digging through remote logs to find that one key debug statement.

To illustrate all this, we put together a Development Diary.

Overview

Audio Streaming on Alexa is largely accomplished via the AudioPlayer interface. API implementers can now work with:

+ New Builtin Intents (such as PauseIntent and RepeatIntent)

These correspond to voice requests made by the user of an Echo or other Alexa device.

+ New “event-like” requests such as PlaybackNearlyFinished and PlaybackStopped

These correspond to events on the Alexa device and provide information about the state of audio playback on the device. They do not require a response, but give the skill developer a chance to manage their view of the state of playback.

For example, when PlaybackStopped is received, the skill can store where it was in playback so it can resume at the right point.

And events like PlaybackNearlyFinished allow for “Just In Time” actions, like queueing up the next track to be played.

+ New responses, termed directives, that instruct the device what to do next

Example directives are AudioPlayer.Play and AudioPlayer.Stop. They are self-explanatory: they let your skill tell the player to start and stop.
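To make these three kinds of traffic concrete, here is a minimal Python sketch of how a skill might route them. It is not code from the example skill: the handler names, the PLAYLIST dictionary with its placeholder URLs, and the numeric token scheme are all hypothetical. It answers AMAZON.PauseIntent with an AudioPlayer.Stop directive, simply acknowledges the event-like requests, and responds to PlaybackNearlyFinished by queueing the next track with an AudioPlayer.Play directive.

```python
from typing import Any, Callable, Dict, List, Optional

Request = Dict[str, Any]   # the "request" object of the Alexa envelope
Response = Dict[str, Any]

# Hypothetical playlist keyed by playback token; the URLs are placeholders.
PLAYLIST: Dict[str, str] = {
    "1": "https://example.com/audio/episode-1.mp3",
    "2": "https://example.com/audio/episode-2.mp3",
}


def respond(directives: Optional[List[Dict[str, Any]]] = None,
            end_session: Optional[bool] = None) -> Response:
    """Wrap optional directives in the response envelope."""
    body: Dict[str, Any] = {}
    if end_session is not None:
        body["shouldEndSession"] = end_session
    if directives:
        body["directives"] = directives
    return {"version": "1.0", "response": body}


def on_pause(req: Request) -> Response:
    # Built-in intent: tell the device to stop playback.
    return respond([{"type": "AudioPlayer.Stop"}], end_session=True)


def on_nearly_finished(req: Request) -> Response:
    # "Just in time": queue the next track while the current one finishes.
    current = req["token"]
    nxt = str(int(current) + 1)  # hypothetical token scheme: "1", "2", ...
    if nxt not in PLAYLIST:
        return respond()  # nothing left to queue
    stream = {
        "url": PLAYLIST[nxt],
        "token": nxt,
        "expectedPreviousToken": current,
        "offsetInMilliseconds": 0,
    }
    return respond([{"type": "AudioPlayer.Play",
                     "playBehavior": "ENQUEUE",
                     "audioItem": {"stream": stream}}])


def acknowledge(req: Request) -> Response:
    # Event-like requests only update our view of playback; no directives.
    return respond()


INTENT_HANDLERS: Dict[str, Callable[[Request], Response]] = {
    "AMAZON.PauseIntent": on_pause,
}
REQUEST_HANDLERS: Dict[str, Callable[[Request], Response]] = {
    "AudioPlayer.PlaybackStarted": acknowledge,
    "AudioPlayer.PlaybackStopped": acknowledge,
    "AudioPlayer.PlaybackFinished": acknowledge,
    "AudioPlayer.PlaybackNearlyFinished": on_nearly_finished,
}


def dispatch(req: Request) -> Response:
    """Route a request object to its handler (unknown types get an empty ack)."""
    if req["type"] == "IntentRequest":
        handler = INTENT_HANDLERS.get(req["intent"]["name"], acknowledge)
    else:
        handler = REQUEST_HANDLERS.get(req["type"], acknowledge)
    return handler(req)


if __name__ == "__main__":
    print(dispatch({"type": "IntentRequest",
                    "intent": {"name": "AMAZON.PauseIntent"}}))
    print(dispatch({"type": "AudioPlayer.PlaybackNearlyFinished",
                    "token": "1", "offsetInMilliseconds": 0}))
```

The ENQUEUE play behavior adds the new stream after the one currently playing, and expectedPreviousToken names the track it is meant to follow, so a late-arriving response does not queue audio behind the wrong item.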

It is also worth noting the PlaybackController interface. We won’t spend much time on it, but keep in mind it is distinct from the AudioPlayer interface – it is for managing interactions driven by button presses (for those devices, like the Amazon Tap, that have buttons).

Following Along

To play around with this ourselves, we made a fork of Amazon’s excellent sample project. Our forked version is available here. If you want to follow along at home, work through our Quick Setup instructions.

The New Interaction Model

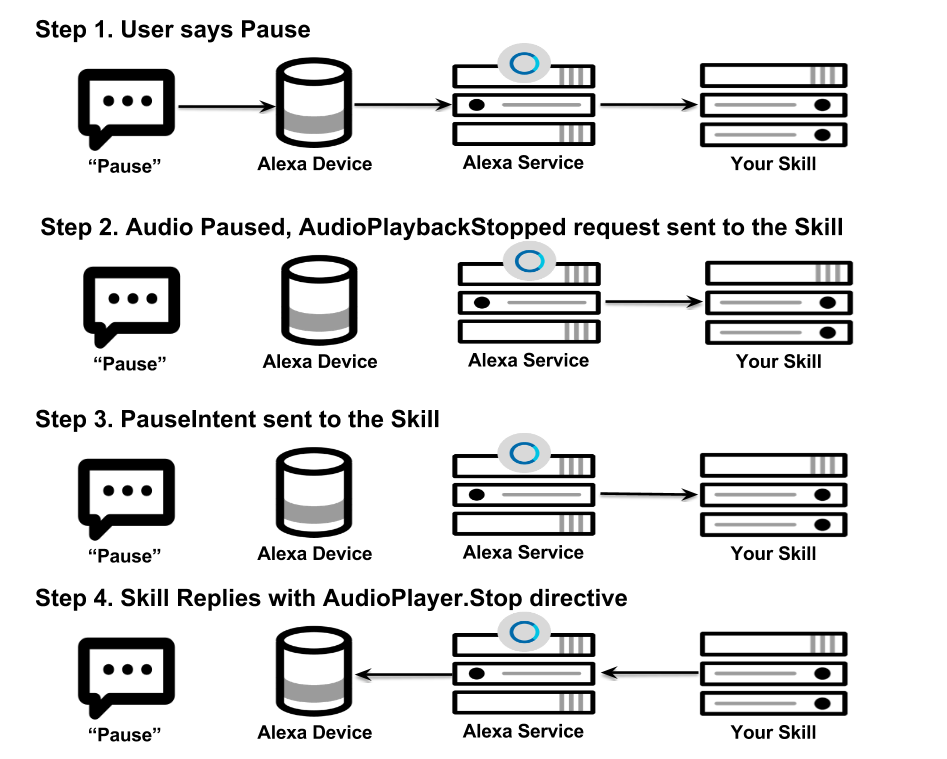

Let’s look at a simple case: an Echo listener saying "pause". What happens?

As you can see, there is quite a bit going on with even a simple Pause intent. Let’s look at the actual sequence of payloads:

Step 1 – User Says Pause

This is a logical step – no payloads (at least for the Alexa skill) to see here 😉

Step 2 – Alexa Service Sends AudioPlayer.PlaybackStopped Request

{
  "type": "AudioPlayer.PlaybackStopped",
  "requestId": "amzn1.echo-api.request.53d6c432-9acd-4e1a-abd6-e5ecf092ac86",
  "timestamp": "2016-08-31T22:56:40Z",
  "locale": "en-US",
  "token": "1",
  "offsetInMilliseconds": 84871
}

This one is simple, but it is perhaps slightly surprising that it arrives before the PauseIntent.

Our example skill provides a response, but none is necessary – this request is for us to manage our internal state.

We do this by writing some information to DynamoDB and then sending back a simple acknowledgement.
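As a rough illustration of that bookkeeping, here is a minimal Python sketch. It is an assumption-laden stand-in, not the example skill's code: an in-memory dictionary replaces the DynamoDB table, and the user id is passed in explicitly (in the full request envelope it can be read from context.System.user.userId). The handler saves the token and offset from the PlaybackStopped payload above and returns an empty acknowledgement.

```python
from typing import Any, Dict

# Stand-in for the DynamoDB table used by the example skill (assumption:
# an in-memory dict keyed by user id; a real skill would persist this).
STATE: Dict[str, Dict[str, Any]] = {}


def on_playback_stopped(user_id: str, request: Dict[str, Any]) -> Dict[str, Any]:
    """Remember where playback stopped so it can be resumed later."""
    STATE[user_id] = {
        "token": request["token"],
        "offsetInMilliseconds": request["offsetInMilliseconds"],
        "playing": False,
    }
    # Simple acknowledgement: no speech and no directives.
    return {"version": "1.0", "response": {}}


if __name__ == "__main__":
    stopped = {
        "type": "AudioPlayer.PlaybackStopped",
        "requestId": "amzn1.echo-api.request.53d6c432-9acd-4e1a-abd6-e5ecf092ac86",
        "timestamp": "2016-08-31T22:56:40Z",
        "locale": "en-US",
        "token": "1",
        "offsetInMilliseconds": 84871,
    }
    print(on_playback_stopped("example-user", stopped))
    print(STATE["example-user"])
```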

Step 3 – Alexa Service Sends Builtin AMAZON.PauseIntent Request

{
  "type": "IntentRequest",
  "requestId": "amzn1.echo-api.request.22dbdc3b-d38d-48fc-a472-8f7a749fae9e",
  "timestamp": "2016-08-31T22:56:41Z",
  "locale": "en-US",
  "intent": {
    "name": "AMAZON.PauseIntent"
  }
}

This looks like what we would expect. Our response, in Step 4, is slightly more interesting.

Step 4 – Alexa Skill Responds With AudioPlayer.Stop Directive

{
  "version": "1.0",
  "response": {
    "shouldEndSession": true,
    "directives": [
      {
        "type": "AudioPlayer.Stop"
      }
    ]
  },
  ...
}
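Producing that response takes very little code. The Python sketch below is illustrative rather than the example skill's implementation. It returns the Step 4 payload for AMAZON.PauseIntent and pairs it with a hypothetical resume handler that turns a saved token and offset (as recorded when PlaybackStopped arrives) back into an AudioPlayer.Play directive. The saved-state dictionary shape and the stream URL are assumptions.

```python
from typing import Any, Dict


def on_pause_intent(request: Dict[str, Any]) -> Dict[str, Any]:
    """Answer AMAZON.PauseIntent with the AudioPlayer.Stop directive."""
    return {
        "version": "1.0",
        "response": {
            "shouldEndSession": True,
            "directives": [{"type": "AudioPlayer.Stop"}],
        },
    }


def on_resume_intent(saved: Dict[str, Any], url: str) -> Dict[str, Any]:
    """Hypothetical AMAZON.ResumeIntent handler: replay the saved track from the saved offset."""
    return {
        "version": "1.0",
        "response": {
            "shouldEndSession": True,
            "directives": [{
                "type": "AudioPlayer.Play",
                "playBehavior": "REPLACE_ALL",
                "audioItem": {"stream": {
                    "url": url,
                    "token": saved["token"],
                    "offsetInMilliseconds": saved["offsetInMilliseconds"],
                }},
            }],
        },
    }
```

For example, on_resume_intent({"token": "1", "offsetInMilliseconds": 84871}, url) would restart track "1" roughly 85 seconds in, which is the "resume at the right point" behavior that storing state on PlaybackStopped makes possible.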

What if we did not send the AudioPlayer.Stop directive?

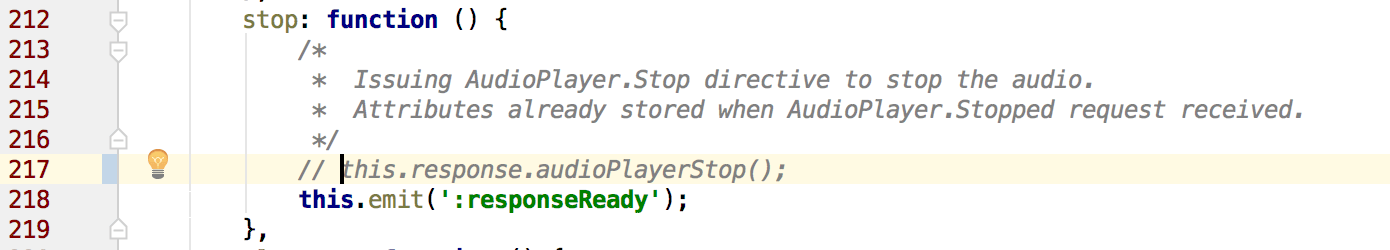

Let’s give it a try: go ahead and comment out the line of code in the project that adds the AudioPlayer.Stop directive to the response.

With that line commented out, try pausing the playback. (Note: with bst proxy there is no need to restart your skill or deploy a new version. Just comment out the line and the change is live!)

The audio magically comes back to life, and guess what pops up in our console? An AudioPlayer.PlaybackStarted request!

{
  "type": "AudioPlayer.PlaybackStarted",
  "requestId": "amzn1.echo-api.request.820ad6ae-aadd-437c-be89-eeedd413f783",
  "timestamp": "2016-08-31T23:24:25Z",
  "locale": "en-US",
  "token": "1",
  "offsetInMilliseconds": 96038
}
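The lesson here is that the device, not the skill, is the final authority on whether audio is playing. One way to respect that is sketched below in Python, under the same assumptions as before (an in-memory dictionary standing in for DynamoDB, a user id passed in explicitly, hypothetical function names). Every PlaybackStarted request overwrites the stored state with the device's reported token and offset and marks playback as running, whether or not the skill expected it.

```python
from typing import Any, Dict

# Hypothetical in-memory state, as left behind by an earlier PlaybackStopped.
STATE: Dict[str, Dict[str, Any]] = {
    "example-user": {"token": "1", "offsetInMilliseconds": 84871, "playing": False},
}


def on_playback_started(user_id: str, request: Dict[str, Any]) -> Dict[str, Any]:
    """Treat PlaybackStarted as the device's report that audio is playing."""
    previous = STATE.get(user_id)
    if previous is not None and not previous["playing"]:
        # We thought playback was stopped; log the transition for debugging.
        print(f"Playback started for {user_id} at "
              f"{request['offsetInMilliseconds']} ms after a stop")
    STATE[user_id] = {
        "token": request["token"],
        "offsetInMilliseconds": request["offsetInMilliseconds"],
        "playing": True,
    }
    # Acknowledge only; no directives are needed.
    return {"version": "1.0", "response": {}}
```

Updating state from these event requests, rather than from what the skill last asked for, keeps the skill's view in sync even when playback resumes unexpectedly, as it did when the Stop directive was missing.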

What have we learned so far?

– The new Alexa Audio Streaming features are powerful and awesome

– There are a number of new requests and responses to be handled

– Having direct access to the actual payloads is a critical development aid as we learn the API's nuances and rapidly iterate on our code in response

What’s Next

Look for a follow-up to this blog post: we’ll walk through more of our observations on the Audio Streaming API and go into more detail on development tricks (including hooking up your live Alexa code directly to a debugger).

We are also working on more functionality so make sure you stay connected either through GitHub or Twitter.