Welcome to the second part of our Conversational AI series, where we delve into the essential aspects of creating high-performing conversational AI applications. In our previous blog post, we discussed the importance of planning and research in the development of Conversational AI. In this post, we will be focusing on modeling – the management of AI components in your conversational application. The models used for speech recognition, natural language understanding, natural language generation, and text-to-speech processing play a critical role in the accuracy and effectiveness of your application. We will be sharing the recommended high-level process for managing these models, including data collection, performance measurement, training, and monitoring. Let’s get started!

Model

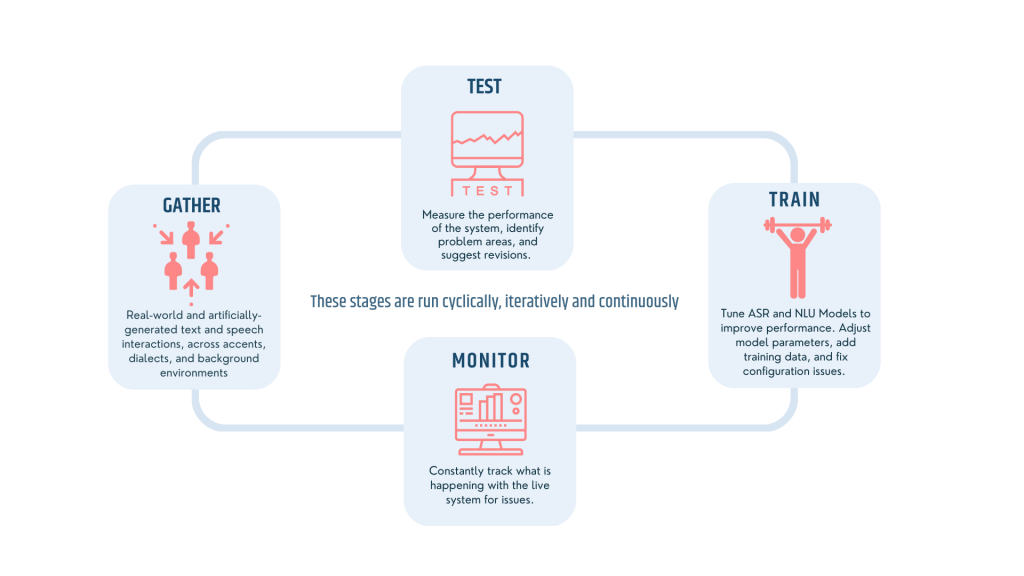

Modeling is about managing the AI components of your conversational application. An AI-based application typically uses models for speech recognition as well as natural language understanding. Other models may be leveraged for natural language generation and text-to-speech processing. It is essential to have processes in place to assess the accuracy of each of these models and improve it over time. The diagram above depicts the high-level process we recommend for managing this.

Each stage is described below. Additionally, you can read more about how modeling works in our in-depth building block here.

Collect

Data collection is typically the first step in managing a model, and over time, it is perhaps the most essential aspect of it. Many systems will only be as good as the data used to train the models on which they rely.

Initial data collection will start simple, often coming directly from the development team or beta users. But over time, this data set can grow vastly, and managing it can become cumbersome and time-intensive. Additionally, the data may come from a myriad of sources, such as crowd-sourced data vendors and production systems. This may sound daunting, and there is not yet a magic bullet to make it easy. But given how critical data is to AI-based models, this process deserves care and attention.
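As a rough illustration of keeping a growing, multi-source data set manageable, the sketch below merges utterance batches while recording where each example came from and dropping duplicates. The `Utterance` record, the `source` tags, and the sample intents are all hypothetical, chosen only for this example; real tooling will differ by platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Utterance:
    text: str    # what the user said or typed
    intent: str  # label the model is expected to predict
    source: str  # hypothetical provenance tag, e.g. "dev-team", "vendor", "production"


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical utterances compare equal."""
    return " ".join(text.lower().split())


def merge_sources(*batches: list[Utterance]) -> list[Utterance]:
    """Combine batches, keeping the first copy of each (normalized text, intent) pair."""
    seen: set[tuple[str, str]] = set()
    merged: list[Utterance] = []
    for batch in batches:
        for utt in batch:
            key = (normalize(utt.text), utt.intent)
            if key not in seen:
                seen.add(key)
                merged.append(utt)
    return merged


dev_team = [Utterance("Play some jazz", "PlayMusic", "dev-team")]
vendor = [
    Utterance("play  some jazz", "PlayMusic", "vendor"),  # duplicate after normalization
    Utterance("What's the weather", "GetWeather", "vendor"),
]
dataset = merge_sources(dev_team, vendor)
```

Keeping a provenance field on every record is one simple way to trace a problem example back to its origin later, for instance when a vendor batch turns out to be mislabeled.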

Measure

Measurement is about testing the model to see how well it is performing. Depending on the nature of the application and the tools being used, we see huge variations in performance. Defining what is acceptable for your application is also very use-case specific.

What is essential, though, is that:

- Acceptance criteria are defined

- Baseline measurements are taken

- Optimization plans/strategies are defined and acted upon

Many systems we work with initially perform at accuracy levels below what is deemed acceptable. However, with careful tuning and training, they can be improved substantially by enhancing the model, taking advantage of tools and settings available from vendors, and leveraging alternative vendors where needed.
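The checklist above can be sketched in a few lines for the natural language understanding case. This is a minimal example, assuming a labeled test set of expected intents and the intents the model actually returned; the 0.90 threshold and the intent names are placeholders, not recommended values.

```python
def accuracy(expected: list[str], predicted: list[str]) -> float:
    """Fraction of test utterances whose predicted intent matches the expected one."""
    if not expected or len(expected) != len(predicted):
        raise ValueError("expected and predicted must be non-empty and the same length")
    correct = sum(e == p for e, p in zip(expected, predicted))
    return correct / len(expected)


ACCEPTANCE_THRESHOLD = 0.90  # hypothetical acceptance criterion; choose per use case

# Hypothetical test set: expected labels vs. what the model returned.
expected = ["PlayMusic", "GetWeather", "PlayMusic", "SetTimer", "GetWeather"]
predicted = ["PlayMusic", "GetWeather", "SetTimer", "SetTimer", "GetWeather"]

baseline = accuracy(expected, predicted)           # baseline measurement
meets_criteria = baseline >= ACCEPTANCE_THRESHOLD  # compare against acceptance criteria
print(f"baseline accuracy: {baseline:.2f}, acceptable: {meets_criteria}")
```

Recording the baseline before any tuning gives later training rounds a fixed point of comparison.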

Train

Training involves adjusting the settings of the model one is working with, as well as adding and removing data from the set used to train it. As the model is trained based on issues identified as part of the measurement and testing, it is critical to re-test and re-assess performance to ensure that:

- The changes are resolving the issues identified

- The changes are not resulting in side effects that cause other parts of the model to deteriorate

Once the trained model is deemed to be at an acceptable performance level, it can be released to see how it works with real users.
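One way to check for the side effects described above is to compare accuracy per intent before and after a training round, rather than relying on a single overall number. The sketch below assumes the same labeled test set is run against both model versions; the function names and sample data are illustrative only.

```python
from collections import defaultdict


def per_intent_accuracy(expected: list[str], predicted: list[str]) -> dict[str, float]:
    """Accuracy for each expected intent, computed over the same test set."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for e, p in zip(expected, predicted):
        totals[e] += 1
        if e == p:
            correct[e] += 1
    return {intent: correct[intent] / totals[intent] for intent in totals}


def regressions(before: dict[str, float], after: dict[str, float]) -> list[str]:
    """Intents whose accuracy dropped after retraining."""
    return sorted(i for i in before if after.get(i, 0.0) < before[i])


# Hypothetical results from the same test set, before and after a training round.
expected = ["PlayMusic", "PlayMusic", "GetWeather", "GetWeather", "SetTimer", "SetTimer"]
before_training = ["PlayMusic", "SetTimer", "GetWeather", "GetWeather", "SetTimer", "SetTimer"]
after_training = ["PlayMusic", "PlayMusic", "GetWeather", "SetTimer", "SetTimer", "SetTimer"]

before = per_intent_accuracy(expected, before_training)
after = per_intent_accuracy(expected, after_training)
regressed = regressions(before, after)  # intents that got worse
```

In this made-up data, overall accuracy is unchanged while one intent improves and another deteriorates, which is exactly the kind of trade-off an aggregate score can hide.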

Monitor

Once released, the model should be carefully monitored. Based on the analytics from the monitoring, the lifecycle should repeat: additional data is collected, the model is measured and trained on this data, and it is then released and monitored again. This cycle is essential to working in Conversational AI, and to achieve top performance, it must be repeated continuously while the application is in use.
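As one possible way to feed monitoring back into data collection, the sketch below flags low-confidence production interactions for human review. It assumes the platform logs a confidence score with each prediction, which not every tool does; the `Interaction` record and the 0.6 cutoff are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    text: str          # what the user said
    intent: str        # intent the model predicted
    confidence: float  # model confidence in [0, 1]; assumes the platform exposes one


def needs_review(logs: list[Interaction], min_confidence: float = 0.6) -> list[Interaction]:
    """Return interactions below the confidence cutoff, lowest confidence first."""
    flagged = [i for i in logs if i.confidence < min_confidence]
    return sorted(flagged, key=lambda i: i.confidence)


# Hypothetical production log entries.
logs = [
    Interaction("play some jazz", "PlayMusic", 0.97),
    Interaction("put on the thing from yesterday", "PlayMusic", 0.41),
    Interaction("is it gonna be wet out", "GetWeather", 0.55),
]
review_queue = needs_review(logs)
```

Reviewed and correctly labeled items from such a queue can then join the data set for the next measure-and-train round.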

Managing models is a critical component of creating high-performing conversational AI applications. By following the steps outlined in this guide – collecting data, measuring performance, training models, and monitoring performance – you can ensure that your application is accurate, effective, and meets the needs of your users. Whether you’re just starting out with conversational AI or looking to optimize your existing applications, effective model management is key. So why not start implementing these strategies today and see the impact they can have on your AI applications? Learn more about the Conversational AI guide or get started with Bespoken now!