The Amazon Echo, powered by the Alexa Voice Assistant, provides an exciting new interface for users. Now that Google has jumped into the fray, momentum for Voice-First experiences is only building.

And the innovation goes beyond just Voice: Alexa has also popularized a new programming model, one that may be as exciting for developers as Voice is for users.

What is different about it?

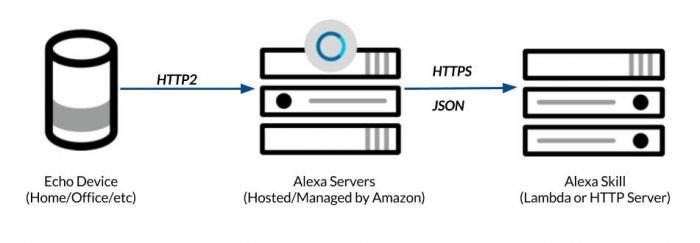

Fundamentally, Alexa is built on Webhooks. They are illustrated below:

Rather than being installed directly on a device, an Alexa app – called a Skill – is installed on a server. It listens for events from the Amazon Alexa service, and responds when they arrive. The interaction is all done using HTTPS and JSON, the backbone of modern web services.
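To make the request and response cycle concrete, here is a minimal sketch in Python of what a Skill does with one incoming event. It assumes the standard Alexa JSON envelope (`request.type`, `response.outputSpeech`, `shouldEndSession`); the intent name `HelloIntent` and the function names are made up for illustration, and the HTTPS listener and request-signature checks that a real endpoint needs are left out.

```python
import json


def speak(text: str, end_session: bool) -> dict:
    """Build a response envelope that makes Alexa say `text`."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def handle_request(event: dict) -> dict:
    """Map one Alexa request envelope to a response envelope."""
    request = event.get("request", {})
    request_type = request.get("type")

    if request_type == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        return speak("Welcome. Ask me to say hello.", end_session=False)

    if request_type == "IntentRequest":
        # "HelloIntent" is a hypothetical intent name for this sketch.
        intent_name = request.get("intent", {}).get("name")
        if intent_name == "HelloIntent":
            return speak("Hello from my server.", end_session=True)
        return speak("Sorry, I did not understand that.", end_session=True)

    # Anything else (for example SessionEndedRequest): reply with no speech.
    return {"version": "1.0", "response": {}}


def handle_webhook_body(body: bytes) -> bytes:
    """Decode the POSTed JSON, run the skill logic, encode the JSON reply."""
    event = json.loads(body.decode("utf-8"))
    return json.dumps(handle_request(event)).encode("utf-8")
```

Everything the skill does fits between "JSON in" and "JSON out", so `handle_webhook_body` can sit behind whatever web framework or hosting option you prefer.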

And it’s not just Alexa Skills that are built this way – this is the fundamental architecture for the majority of the Bot platforms – including Google Actions, Facebook Messenger, MS Bot Builder, Telegram, Kik, Slack, and others. Webhooks are the bot programming model.

Why Is The Alexa Model Better?

1. No installation

When building mobile apps, or even web apps, the combinations of devices, operating system versions, and device configurations that need to be supported are effectively endless. On mobile, no one really knows whether their app runs correctly everywhere it is installed; it’s simply impractical to do that much testing. And this leaves aside having to support both iOS and Android, which is a whole extra layer of headache and complexity.

With Webhooks, the app runs in one place – your environment. It could be a Serverless AWS Lambda. Or it could be a server running in your data center. It may be written in Python, JavaScript, Java or .NET. It might be written in Assembly language for all Alexa or the user cares. The bottom line is the developer has total control over every aspect of the environment. The number of configuration scenarios goes from practically infinite to one.
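As a rough illustration of "one place, your environment", the sketch below exposes the same skill logic through two entry points: a function with the `(event, context)` signature that AWS Lambda's Python runtime calls, and a WSGI application that could run on a server in your own data center. The names `skill_logic`, `lambda_handler`, and `wsgi_app` are my own choices for this example, and the skill itself is a stub that always gives the same reply.

```python
import json


def skill_logic(event: dict) -> dict:
    """Stub skill: ignores the event and always answers the same way."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": "Hello from one code base."},
            "shouldEndSession": True,
        },
    }


def lambda_handler(event, context):
    """Entry point when hosted as an AWS Lambda function.

    Lambda passes the already-parsed request JSON as `event`.
    """
    return skill_logic(event)


def wsgi_app(environ, start_response):
    """Entry point when hosted on your own server behind a WSGI container."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    raw_body = environ["wsgi.input"].read(length) or b"{}"
    body = json.dumps(skill_logic(json.loads(raw_body))).encode("utf-8")
    start_response(
        "200 OK",
        [("Content-Type", "application/json"), ("Content-Length", str(len(body)))],
    )
    return [body]
```

Either way, the user's device never changes; only the place where your single copy of the code runs does.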

2. Smaller Surface Area

It is early days for Alexa, and Bots in general. And the interfaces will grow more complex in time.

But the iOS API, as of iOS version 9, has over 13,000 elements. That is an astounding amount of functionality. It’s also an overly generous length of rope with which developers can hang themselves and their entire team. Not to mention that it’s common for iOS and Android to undercut large swaths of their development communities with a single OS update, either through explicit API changes or through unintended side effects of bugs or enhancements.

The API complexity level is simply much lower with the Alexa model, which by my count has fewer than 100 elements between its request and response models. This certainly means less functionality, but it also means much less can go wrong, for both the programmer and the user.
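To give a feel for how small that surface is, here is a sketch that models a good part of the response side in a few Python dataclasses. The class and attribute names are invented for this example; only the JSON keys they emit (`outputSpeech`, `card`, `reprompt`, `shouldEndSession`, `sessionAttributes`) follow the Alexa response format as I understand it, and this covers a subset rather than the full model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OutputSpeech:
    text: str
    type: str = "PlainText"


@dataclass
class SimpleCard:
    title: str
    content: str
    type: str = "Simple"


@dataclass
class SkillResponse:
    speech: OutputSpeech
    card: Optional[SimpleCard] = None
    reprompt: Optional[OutputSpeech] = None
    should_end_session: bool = True
    session_attributes: dict = field(default_factory=dict)

    def to_json_dict(self) -> dict:
        """Render the response envelope as a plain dict ready for json.dumps."""
        response = {
            "outputSpeech": {"type": self.speech.type, "text": self.speech.text},
            "shouldEndSession": self.should_end_session,
        }
        # Optional parts are only emitted when they were provided.
        if self.card is not None:
            response["card"] = {
                "type": self.card.type,
                "title": self.card.title,
                "content": self.card.content,
            }
        if self.reprompt is not None:
            response["reprompt"] = {
                "outputSpeech": {"type": self.reprompt.type, "text": self.reprompt.text}
            }
        return {
            "version": "1.0",
            "sessionAttributes": self.session_attributes,
            "response": response,
        }
```

A call such as `SkillResponse(OutputSpeech("Hi")).to_json_dict()` produces a complete reply, which is about as much API as a simple skill needs to know.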

3. Easy Updates

Want to provide new functionality or fix a critical bug? No need to go through an elaborate update process to get that new code onto users’ devices. Instead, just re-deploy. If you are using AWS Lambda for your skill, you can just type in your change and hit save. This is not recommended for major production implementations, but it illustrates the simplicity and immediacy of making updates. Developers do not need to live in fear of a bug blowing half their user-base out of the water for days or weeks while they wait for a re-certification.

What Does It Mean?

Programming Alexa can seem so easy that it is easy to overlook what a revolutionary paradigm shift it represents. Amazon has built a superior model for delivering apps and experiences. Google has caught on, but no one seems to have told Apple yet. Perhaps a buggy app has delayed delivery of the news 🙂

This model is a boon for developers – there are already more than 8,000 Alexa skills. And the number is likely to only grow faster. I am confident that anyone reading this can build a simple Alexa skill. The same is not true for a mobile app, or even a rich HTML5 web app.

But the tooling for this new model is just starting to catch up. As easy as it is to get started, building great, high-quality experiences still requires good development practices. That’s why we built the Bespoken Tools. They allow for:

- Easy local debugging and testing

- Monitoring

- Even automated unit and end-to-end testing
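Because a skill is just a function from JSON to JSON, it can be unit tested without a device or a deployed endpoint. The sketch below uses only Python's standard `unittest` module, not the Bespoken Tools themselves; the handler, the `GreetIntent` intent, and its `Name` slot are hypothetical names chosen for this example.

```python
import unittest


def handle_request(event: dict) -> dict:
    """Tiny skill under test: greets the person named in the 'Name' slot."""
    request = event.get("request", {})
    intent = request.get("intent", {})
    if request.get("type") == "IntentRequest" and intent.get("name") == "GreetIntent":
        name = intent.get("slots", {}).get("Name", {}).get("value", "there")
        text, end_session = f"Hello, {name}.", True
    else:
        text, end_session = "Ask me to greet someone.", False
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


class SkillTests(unittest.TestCase):
    def test_greets_by_name(self):
        event = {
            "request": {
                "type": "IntentRequest",
                "intent": {
                    "name": "GreetIntent",
                    "slots": {"Name": {"name": "Name", "value": "Ada"}},
                },
            }
        }
        reply = handle_request(event)
        self.assertEqual(reply["response"]["outputSpeech"]["text"], "Hello, Ada.")
        self.assertTrue(reply["response"]["shouldEndSession"])

    def test_launch_keeps_session_open(self):
        reply = handle_request({"request": {"type": "LaunchRequest"}})
        self.assertFalse(reply["response"]["shouldEndSession"])


def run_tests() -> unittest.TestResult:
    """Run the suite in-process and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SkillTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_tests()` exercises the skill locally in well under a second, which is the kind of feedback loop that is hard to get with code installed on users' devices.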

Our aim is to make the development experience even better, and by extension, the user’s experience as well. Alexa, and Webhooks, open up whole new avenues for innovation. As users and developers, we are excited to see where they take us.

Nice, very good news, thanks.

I totally agree that Apple is moving much slower because of its process, but they really do focus on delivering the same experience to their customers across all supported products.

And unfortunately I can’t agree with your statement that the deployment process with Alexa and Google is good, because if it were as good as you describe, your tool would not be as popular 🙂 It is way too easy to deploy buggy code, and even their voice recognition, especially Google’s, changes on a weekly basis, which adds a whole other level of complexity and difficulty to bringing great, stable products to the voice market.