I read yesterday’s blog post from Amazon on developing Alexa skills locally with great interest, as accelerating Alexa Skill development is a focus for us.

The practices they outline are very useful. I wanted to quickly point out what we are doing with our Bespoken Tools, and how we think they can further improve and streamline the skill development experience.

The approaches are not mutually exclusive, so our goal is simply to inform people about the tools we provide. By understanding all the capabilities available, developers can make the best decision on how to set up their environment.

Running Lambdas Locally As Local Services

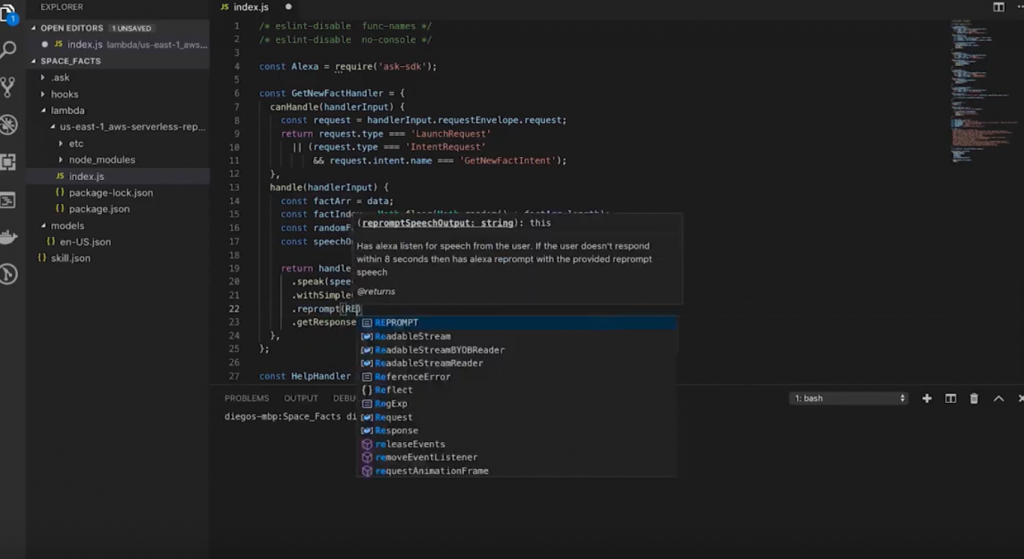

Under “Add The Code”, the blog post essentially describes how to turn an AWS Lambda into a runnable local service.

Conveniently, and without any cutting or pasting, our bst proxy does the same thing 🙂

Once our Bespoken CLI is installed, just enter:

$ bst proxy lambda <LAMBDA_FILE>

Et voilà! Your Lambda is now running as an HTTP service at:

http://localhost:10000

(The port is configurable; by default, the proxy listens on 10000.)
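For readers curious about what "turning a Lambda into a local service" involves, here is a minimal Python sketch using only the standard library: an HTTP server that decodes each POST body as the Lambda event, calls the handler, and returns the handler's result as JSON. It illustrates the idea only and is not how bst proxy is implemented. The handler function is a hypothetical stand-in for your skill's entry point, the response shape is simplified, and None is passed in place of the real Lambda context object.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handler(event, context):
    """Hypothetical Lambda handler standing in for your skill's entry point."""
    intent = event.get("request", {}).get("intent", {}).get("name", "Unknown")
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": f"You invoked {intent}"},
            "shouldEndSession": True,
        },
    }


def invoke(lambda_handler, body: bytes) -> bytes:
    """Decode an HTTP body as the Lambda event, call the handler, encode its result."""
    event = json.loads(body)
    result = lambda_handler(event, None)  # None stands in for the Lambda context object
    return json.dumps(result).encode("utf-8")


def make_request_handler(lambda_handler):
    """Build a request-handler class that forwards every POST to lambda_handler."""

    class LambdaProxyHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            payload = invoke(lambda_handler, self.rfile.read(length))
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

    return LambdaProxyHandler


def serve(lambda_handler, port: int = 10000) -> None:
    """Listen on localhost:<port> until interrupted (10000 mirrors bst proxy's default)."""
    server = HTTPServer(("localhost", port), make_request_handler(lambda_handler))
    server.serve_forever()
```

Calling serve(handler) blocks and listens on port 10000. Keeping the HTTP plumbing separate from invoke means the handler logic can also be exercised directly, without a running server.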

In addition to the techniques mentioned in the blog post (such as sending JSON payloads directly to the service), your local service can also receive actual requests from Alexa.

That’s right: the real requests. Not just your own locally crafted JSON, but the actual payloads generated when you interact with an Echo, the Service Simulator, Echosim.io, or whatever device you prefer to test with.

This requires configuring your Alexa skill to point at a special URL (printed on the command line when you start the proxy). Once you do, you will be working in what we call beast mode: rampage through dev/test cycles, terrorize bugs, and crush out features. Fun, right? 🙂

Testing Intents with the Bespoken CLI

The blog then proceeds to describe testing your local service using JSON files.
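Because the proxy is a plain HTTP endpoint, the saved-JSON approach works against it too. Below is a minimal Python sketch. It assumes your request payloads are saved as .json files in one directory, and that the proxy accepts a POST with a JSON body at its root URL on the default port 10000. The names build_request, load_payloads and replay are hypothetical helpers, not part of Bespoken Tools or the Alexa SDK.

```python
import json
import urllib.request
from pathlib import Path

# Assumed endpoint: the default bst proxy port, root path.
LOCAL_SERVICE_URL = "http://localhost:10000"


def build_request(payload: dict, url: str = LOCAL_SERVICE_URL) -> urllib.request.Request:
    """Wrap an Alexa request payload in an HTTP POST with a JSON body."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def load_payloads(directory: str) -> dict:
    """Read every *.json file in a directory, keyed by file name, in sorted order."""
    return {p.name: json.loads(p.read_text()) for p in sorted(Path(directory).glob("*.json"))}


def replay(directory: str, url: str = LOCAL_SERVICE_URL) -> None:
    """POST each saved payload to the local service and print its JSON response."""
    for name, payload in load_payloads(directory).items():
        with urllib.request.urlopen(build_request(payload, url)) as resp:
            print(name, "->", json.loads(resp.read()))
```

For example, replay("payloads") would send every saved request in a hypothetical payloads directory and print each response. The sketch sticks to urllib so it has no third-party dependencies.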

This is a great method of testing. But we have another way, which can be even easier to use depending on your setup.

That is our bst speak command.

It uses the local copies of your IntentSchema and SampleUtterances to turn phrases into intents, and then into JSON requests that are automatically sent to your local service!

So, if you have started a proxy as described above, just enter:

$ bst speak Hello World

We will turn it into a HelloWorldIntent request, send it to the service, and show you both the request and the response from the service.

Reference docs for the command are here.
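To make the phrase → intent → JSON pipeline behind bst speak more concrete, here is a deliberately naive Python sketch. It is not Bespoken's implementation. It assumes the older SampleUtterances text format (one "IntentName utterance" pair per line, with slots written as {SlotName}), ignores slot types from the IntentSchema, and builds a simplified request envelope with placeholder IDs. All function names are hypothetical.

```python
import re
import uuid


def _strip_punctuation(text: str) -> str:
    """Lowercase and drop everything except word characters and whitespace."""
    return re.sub(r"[^\w\s]", "", text.lower())


def normalize(phrase: str) -> str:
    """Lowercase, strip punctuation, and collapse runs of whitespace."""
    return " ".join(_strip_punctuation(phrase).split())


def parse_sample_utterances(text: str) -> list:
    """Parse lines like 'HelloWorldIntent hello world' into (intent, utterance) pairs."""
    pairs = []
    for line in text.splitlines():
        line = line.strip()
        if line:
            intent, _, utterance = line.partition(" ")
            pairs.append((intent, utterance.strip()))
    return pairs


def utterance_to_regex(utterance: str) -> "re.Pattern":
    """Turn 'say hello to {Name}' into a regex with one named group per slot."""
    # With a capture group, re.split alternates literal text (even indexes)
    # and slot names (odd indexes).
    parts = re.split(r"\{(\w+)\}", utterance)
    regex = "".join(
        re.escape(_strip_punctuation(part)) if i % 2 == 0 else f"(?P<{part}>.+?)"
        for i, part in enumerate(parts)
    )
    return re.compile(f"^{regex}$")


def match_intent(phrase: str, pairs: list):
    """Return (intent_name, slots) for the first sample utterance matching the phrase."""
    text = normalize(phrase)
    for intent, utterance in pairs:
        match = utterance_to_regex(utterance).match(text)
        if match:
            return intent, match.groupdict()
    return None, {}


def build_intent_request(intent: str, slots: dict) -> dict:
    """Wrap a matched intent in a simplified IntentRequest envelope (placeholder IDs)."""
    return {
        "version": "1.0",
        "session": {"new": True, "sessionId": str(uuid.uuid4())},
        "request": {
            "type": "IntentRequest",
            "requestId": str(uuid.uuid4()),
            "intent": {
                "name": intent,
                "slots": {name: {"name": name, "value": value} for name, value in slots.items()},
            },
        },
    }
```

For example, match_intent("Hello World", parse_sample_utterances("HelloWorldIntent hello world")) returns ("HelloWorldIntent", {}), and build_intent_request turns that result into a JSON-ready dict that could be POSTed to the local service. First match wins and slot values are captured as free text, so treat this as an illustration of the pipeline rather than a model of how Alexa actually resolves speech.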

Conclusion

We point these items out to make developers aware of what we think are some pretty neat tools we are building. The goal is to help them build skills faster and better.

We hope Alexa devs will see Bespoken Tools as a great addition to their tool belt.

Give our Bespoken CLI a try and let us know what you think!

The Standard Stuff

Talk to us on Gitter. Stay updated through GitHub.