Welcome back to our blog series on conversational AI applications! In our previous post, we discussed the key considerations for creating a successful conversational experience, including choosing the right platform, designing effective conversational flows, and integrating natural language processing (NLP) and machine learning (ML) technologies. In this post, we focus on monitoring the performance of your conversational AI application. We explore four areas of performance monitoring: user behavior, user feedback, availability, and utterance monitoring. By paying attention to these areas, we can identify where our application can be improved and ensure that it provides a seamless and effective experience for users. Let’s dive in.

We monitor four areas of system performance:

- User behavior – how users are actually interacting with the system

- User feedback – across other channels, whether customer support, email, bug reporting systems, or social media, we want to look for and capture what users are saying about our application

- Availability monitoring – ensuring the system is up and responsive to users

- Utterance monitoring – we capture, in as much detail as possible, what users are actually saying. This data can then be added to our test set and used to identify where the system struggles to understand users.

User Behavior Monitoring

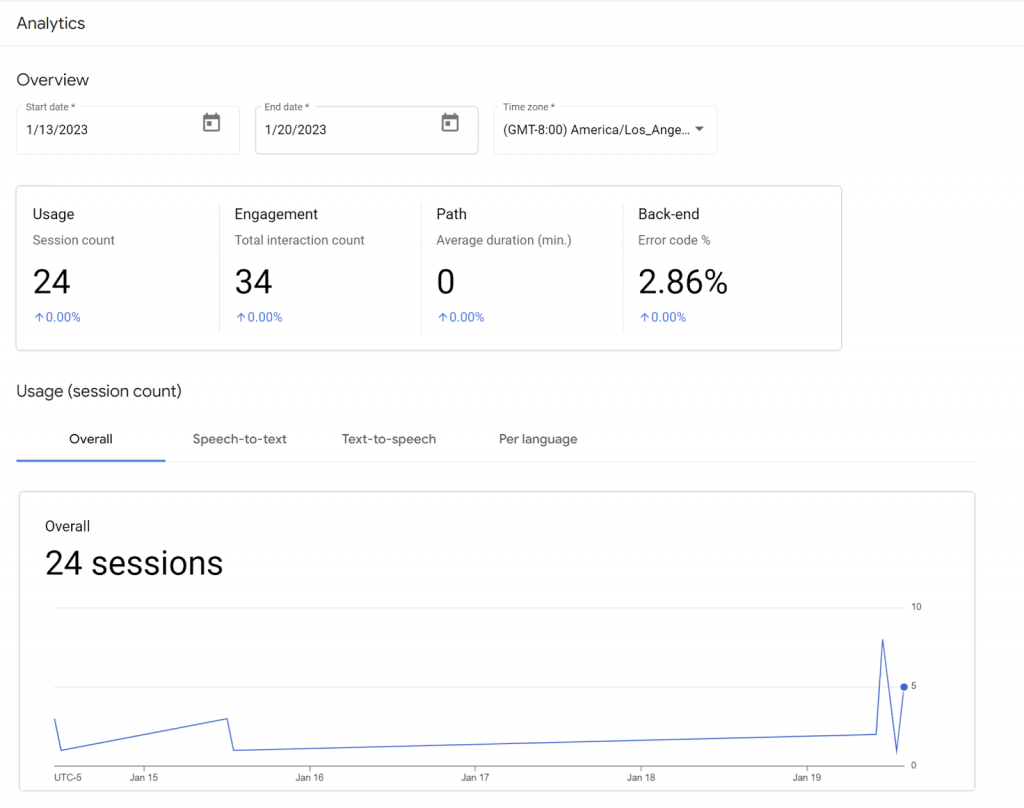

Many readers are familiar with, and have used, Google Analytics, perhaps the most popular user behavior monitoring system in existence. It is important to put such a system in place as soon as you have users, so you can start answering these key questions:

- What are users doing most commonly?

- Where do they seem to be getting stuck or dropping off?

- Where is the system inefficient and could be improved?

- What are users asking the system to do that it cannot do, and why?
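To make these questions concrete, here is a minimal Python sketch. It computes three things from session logs: the most common intents, the most frequent points where sessions end, and the fallback rate. It assumes a hypothetical log format in which each session is the ordered list of intent names the NLU matched. `summarize_sessions` is illustrative and is not part of Google Analytics or Dialogflow. The sketch also assumes the catch-all intent is named `Default Fallback Intent`.

```python
from collections import Counter

# Assumed name of the intent that fires when the NLU cannot match the user.
FALLBACK = "Default Fallback Intent"

def summarize_sessions(sessions: list[list[str]]) -> dict:
    """Summarize hypothetical session logs (each session = ordered intent names)."""
    intent_counts = Counter(intent for session in sessions for intent in session)
    # The last intent in each session approximates where users stop or drop off.
    exit_points = Counter(session[-1] for session in sessions if session)
    total_turns = sum(intent_counts.values())
    # Share of all turns the system failed to understand.
    fallback_rate = intent_counts[FALLBACK] / total_turns if total_turns else 0.0
    return {
        "top_intents": intent_counts.most_common(3),
        "top_exit_points": exit_points.most_common(3),
        "fallback_rate": fallback_rate,
    }

sessions = [
    ["welcome", "check_balance", "goodbye"],
    ["welcome", "transfer_funds", FALLBACK],
    ["welcome", FALLBACK, FALLBACK],
]
summary = summarize_sessions(sessions)
```

Treating the last intent as the drop-off point is a simplification. A session that ends on a goodbye intent is a success, not an abandonment, so in practice you would exclude expected closing intents before looking for where users get stuck.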

Here is an example of how a usage report for our system looks in Dialogflow:

User Feedback Monitoring

Alongside capturing and analyzing user behavior, we want to gather data from other channels that may provide valuable feedback on what users like, dislike, or simply do not understand about the conversational application.

These channels include:

- Customer support

- Social media

- Bug reporting systems – ideally these are available and readily accessible to users
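Feedback from these channels arrives in different formats, so it helps to normalize it into one record type before reviewing it. The sketch below is a hypothetical illustration. `FeedbackItem`, the channel labels, and the phrases in `MISUNDERSTANDING_HINTS` are assumptions. Simple keyword matching stands in for whatever classification you actually use.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class FeedbackItem:
    channel: str  # hypothetical labels, e.g. "support", "social", "bug_tracker"
    text: str

# Hypothetical phrases suggesting the assistant misunderstood the user.
MISUNDERSTANDING_HINTS = ("didn't understand", "did not understand", "wrong answer", "keeps asking")

def triage(items: list[FeedbackItem]) -> tuple[Counter, list[FeedbackItem]]:
    """Count feedback per channel and flag items that mention misunderstandings."""
    by_channel = Counter(item.channel for item in items)
    flagged = [
        item for item in items
        if any(hint in item.text.lower() for hint in MISUNDERSTANDING_HINTS)
    ]
    return by_channel, flagged
```

Flagged items are good candidates to cross-reference with utterance logs, since they often point at specific phrasings the model handles poorly.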

Availability Monitoring

Like the two concerns above, availability monitoring applies to any application, not just a conversational one. We want to make sure that:

- The system is up and working

- It is responding to users in a timely fashion (i.e., there is not excessive latency)

- If there is an issue, engineering/operations is notified about it as soon as possible
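Dedicated tools handle this in production, but the core of a synthetic availability check is simple. It sends a known test utterance, times the reply, and raises an alert if the request fails or is too slow. In this sketch, `send_test_utterance` and `notify` are hypothetical callables. You would wire them to your assistant's endpoint and your alerting system. The 2-second threshold is an assumed value.

```python
import time
from typing import Callable

LATENCY_THRESHOLD_S = 2.0  # assumed acceptable response time, in seconds

def check_availability(
    send_test_utterance: Callable[[], str],
    notify: Callable[[str], None],
    threshold_s: float = LATENCY_THRESHOLD_S,
) -> bool:
    """Send one test utterance; alert and return False if down, empty, or slow."""
    start = time.monotonic()
    try:
        reply = send_test_utterance()
    except Exception as exc:  # any failure to get a reply counts as "down"
        notify(f"DOWN: {exc}")
        return False
    elapsed = time.monotonic() - start
    if not reply:
        notify("DEGRADED: empty response")
        return False
    if elapsed > threshold_s:
        notify(f"SLOW: responded in {elapsed:.2f}s (threshold {threshold_s}s)")
        return False
    return True
```

Running a check like this on a schedule from outside your own infrastructure catches outages that internal metrics can miss.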

Tools such as Datadog, New Relic, Splunk, and others are commonly used for this. Bespoken provides tools built specifically for monitoring conversational AI applications.

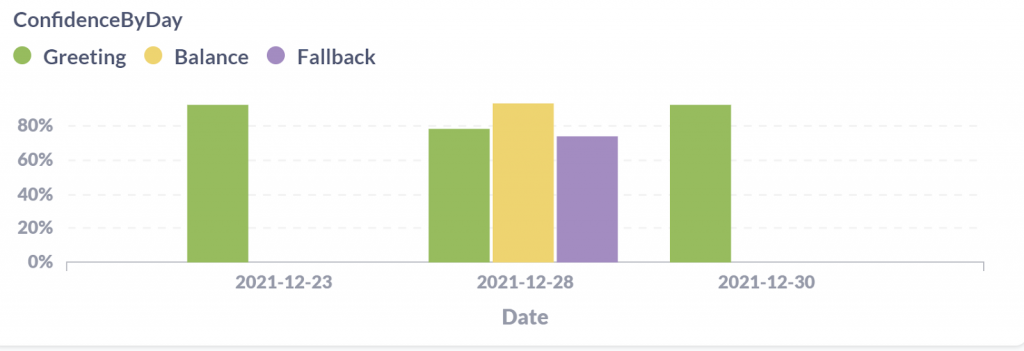

Utterance Monitoring

Utterance monitoring is unique to working in conversational AI. Because the field is new, not everyone pays attention to it at first, but it is critical. It entails capturing all the data related to each user interaction, at as granular a level as possible. This data includes:

- Raw audio of what the user actually said (if available/applicable)

- Speech recognition interpretations, with confidence levels, of what the user said

- Natural language interpretations, with confidence levels, of what the user said

- Actual responses from the system
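One way to keep these pieces together is a single record per conversational turn. The Python sketch below is an assumption about structure, not a Bespoken or Dialogflow schema. `UtteranceRecord`, `Hypothesis`, and `Interpretation` are hypothetical names. `audio_uri` is `None` for text-only channels.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional

@dataclass
class Hypothesis:
    """One speech recognition alternative and its confidence."""
    transcript: str
    confidence: float

@dataclass
class Interpretation:
    """One natural language interpretation (intent) and its confidence."""
    intent: str
    confidence: float

@dataclass
class UtteranceRecord:
    session_id: str
    audio_uri: Optional[str]  # where the raw audio is stored; None if unavailable
    asr_hypotheses: list[Hypothesis] = field(default_factory=list)
    nlu_interpretations: list[Interpretation] = field(default_factory=list)
    response: str = ""  # what the system actually said back

    def best_transcript(self) -> Optional[str]:
        """Return the highest-confidence speech recognition hypothesis, if any."""
        best = max(self.asr_hypotheses, key=lambda h: h.confidence, default=None)
        return best.transcript if best is not None else None

    def to_json(self) -> str:
        """Serialize the full record, including all alternatives, for storage."""
        return json.dumps(asdict(self))
```

Keep the lower-ranked alternatives, not just the winner. They let you check later whether the right interpretation was available but lost out.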

The goal is to have a complete, detailed picture of what a user said, how it was interpreted, and what alternative interpretations were considered. This data can then be used to train and improve the model.
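As an example of putting this data to work, the sketch below does two things. First, it selects turns whose best intent confidence falls under a threshold, so a person can label them. Second, it turns a labeled turn into a test case. The dictionary keys, the 0.6 threshold, and the JSON test-case shape are all assumptions for illustration.

```python
import json

# Assumed cutoff: turns whose best intent confidence is below this get reviewed.
REVIEW_THRESHOLD = 0.6

def select_for_review(records: list[dict], threshold: float = REVIEW_THRESHOLD) -> list[dict]:
    """Return records whose top NLU interpretation is missing or below threshold."""
    selected = []
    for record in records:
        interpretations = record.get("nlu_interpretations", [])
        best = max((i["confidence"] for i in interpretations), default=0.0)
        if best < threshold:
            selected.append(record)
    return selected

def to_test_case(record: dict, expected_intent: str) -> str:
    """Turn a human-reviewed utterance into a JSON test case (hypothetical format)."""
    return json.dumps({"input": record["transcript"], "expected_intent": expected_intent})
```

Reviewed cases can go into a regression test set, and the corrected labels can be fed back as training examples.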

In conclusion, monitoring the performance of your conversational AI application is essential for providing a seamless and effective experience for your users. By capturing data on user behavior, feedback, availability, and utterances, you can identify areas for improvement and make data-driven decisions about how to optimize your application. At Bespoken, we provide tools and solutions specifically designed to help you monitor and optimize your conversational AI application. To learn more about how Bespoken can help you create the best conversational AI experience for your users, visit our website or contact us today to get started!