When we introduced IVR tests into our offering almost a year ago, we were excited to help customers looking for an easy way to test their digital contact centers. Our tools provided a much-needed solution for companies that could no longer get by with just manual testing over a phone line.

Over the last year, we have worked with several cutting-edge companies in the IVR space and helped them improve their systems. It has been a rewarding experience, and it also made us think about how we could improve the accuracy of our tests. You can read our latest case study here to learn more about the type of work we have been doing.

Where we were and why we needed a change

Our first version focused heavily on keeping the same syntax as our existing end-to-end tests for Alexa and Google Assistant. Unlike those systems, where conversations happen “in turns”, a phone call allows any participant to speak at any time. So what was the best way to simulate that pattern for IVR? Two words: silence detection (or so we thought).

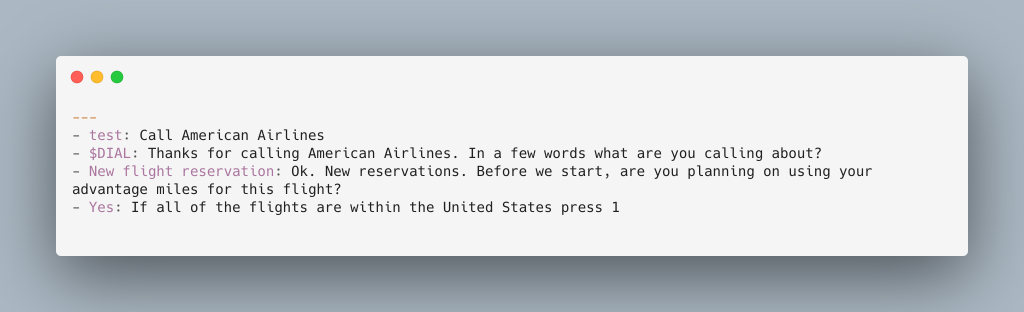

By identifying when an IVR system “stopped talking” for a set period, we could safely assume that it was our tests’ turn to start talking and move the interaction forward. Whatever the IVR system had said up to that point would be processed by a speech-to-text service and compared with our tests’ expected values as usual. This allowed for succinct tests that, except for a couple of special syntax keywords, looked exactly like the rest of our end-to-end tests. Here’s an example:

The reality is that different systems wait for a response for different lengths of silence (which may also vary between interactions), produce audio at different volume levels, and have very different audio quality. What’s more, some systems don’t stay silent while waiting for the user to speak and play background music instead. Others remain silent while processing data on their own (looking up a user’s order, for example) without expecting the user to say anything. All these cases presented challenges to our IVR testing offering, as the configuration for each system became unique, less user-friendly, and hard to standardize.
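To see why a single configuration is hard to standardize, consider a minimal sketch of silence-based turn detection. This is an illustration only, not our production code: the function name `find_turn_end`, the per-frame loudness values, and the default threshold and durations are all assumptions chosen for the example.

```python
from typing import Optional, Sequence


def find_turn_end(
    frame_levels: Sequence[float],
    frame_ms: int = 20,
    silence_threshold: float = 0.05,
    min_silence_ms: int = 1000,
) -> Optional[int]:
    """Return the time in ms at which the caller's turn would start, or None.

    The turn starts once the loudness has stayed below `silence_threshold`
    for at least `min_silence_ms`, counted only after some speech was heard.
    """
    # Number of consecutive quiet frames required (ceiling division).
    needed = -(-min_silence_ms // frame_ms)
    quiet_run = 0
    heard_speech = False
    for i, level in enumerate(frame_levels):
        if level >= silence_threshold:
            # Anything loud enough counts as "the IVR is still talking".
            heard_speech = True
            quiet_run = 0
        elif heard_speech:
            quiet_run += 1
            if quiet_run >= needed:
                return (i + 1) * frame_ms
    return None


# Hypothetical loudness values, one per 20 ms frame.
prompt = [0.4] * 50        # 1 s of speech
silence = [0.0] * 100      # 2 s of true silence
hold_music = [0.1] * 100   # 2 s of quiet background music

print(find_turn_end(prompt + silence))     # 2000
print(find_turn_end(prompt + hold_music))  # None
```

With these made-up numbers, the threshold that works on a quiet line never fires once hold music is playing, so each system would need its own tuning.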

A step back before moving forward

We decided we should go back to square one and rebuild our approach from a user perspective. Take a look at this sample conversation from a call to American Airlines:

Some key moments can be identified in this sample call:

- Dialing the American Airlines number

- Replying with our intention after the prompt “please, tell me what you’re calling about”

- Repeating our intention if the IVR system does not understand us

- Pressing a number on the phone keypad if necessary

As a caller, it’s clear that I won’t say something or press a button on my phone’s keypad unless the IVR system instructs me to, and if the system does not understand me, I will have to repeat my intention. With this in mind, we created a couple of new keywords to handle these scenarios, effectively going from a silence-detection-based approach to an instruction-based one.

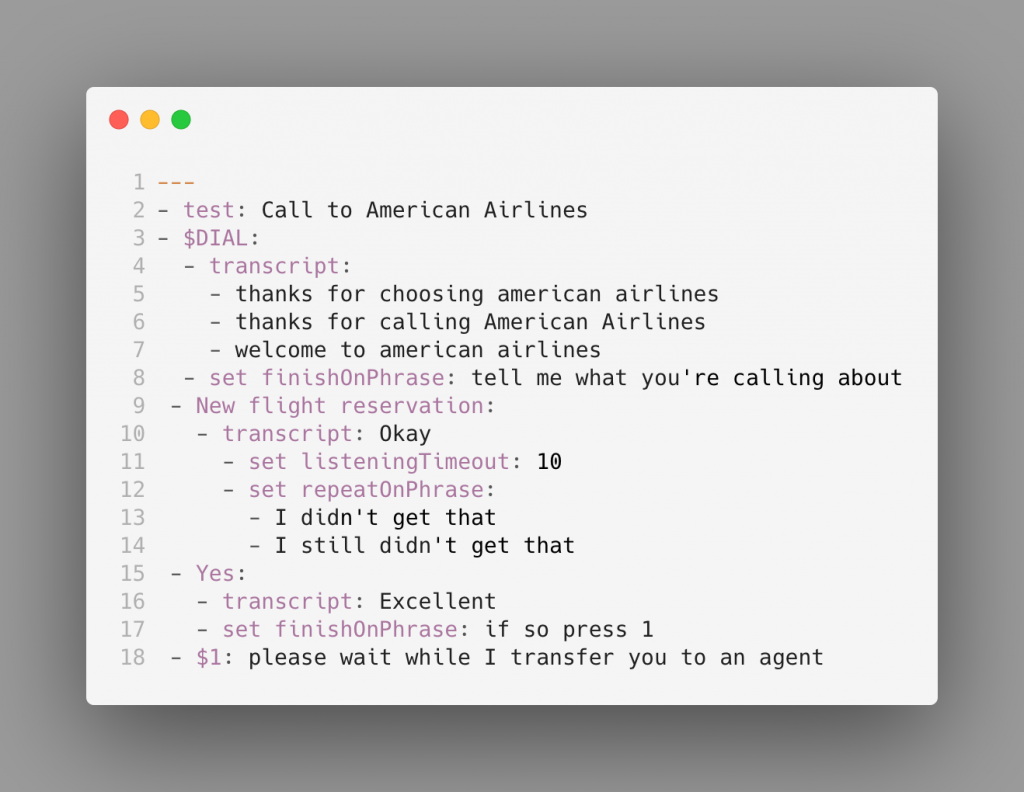

Here’s what the same test looks like now:

And here’s what’s happening under the covers:

- On line 3, we make a call to American Airlines (the phone number is in a separate configuration file).

- Lines 4 to 7 contain all the possible welcome phrases the IVR system can say to us. They are the expected values we are looking for in that interaction.

- Line 8 makes use of a new “set” keyword, used to configure options for the interaction. In this case, “finishOnPhrase” tells our test to move to the next interaction as soon as we’re told: “tell me what you are calling about”.

- Line 9 contains the next interaction: “New flight reservation”.

- On line 11, instead of relying on a finishing phrase for the interaction, we tell our tests to go to the next interaction after a number of seconds with the “listeningTimeout” property. In this case, 10 seconds.

- Line 12 sets the phrases that, if heard, will make us repeat “New flight reservation” to the IVR system.

- The following lines keep the conversation going until, on line 18, we press 1 on our keypad. This last interaction does not require a finishOnPhrase or listeningTimeout since it’s the one that ends the call.
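The decision logic described in the steps above can be sketched in a few lines of Python. This is a simplified illustration, not the actual test engine: `Interaction`, `run_interaction`, the `(seconds, text)` transcript format, the `repeat_phrases` field, and the sample IVR sentences are hypothetical. Only “finishOnPhrase”, “listeningTimeout”, and the quoted prompts come from the test described above.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple


@dataclass
class Interaction:
    say: str                                   # what the caller says or dials
    finish_on_phrase: Optional[str] = None     # mirrors "finishOnPhrase"
    listening_timeout: Optional[float] = None  # mirrors "listeningTimeout" (seconds)
    repeat_phrases: List[str] = field(default_factory=list)  # hypothetical name


def run_interaction(
    interaction: Interaction,
    transcript: Iterable[Tuple[float, str]],
) -> Tuple[str, List[str]]:
    """Consume (seconds_since_we_spoke, text) chunks and decide the next step.

    Returns (action, heard), where action is "next", "repeat", or "end".
    """
    heard: List[str] = []
    for elapsed, text in transcript:
        timeout = interaction.listening_timeout
        if timeout is not None and elapsed > timeout:
            return "next", heard      # waited long enough, move on
        heard.append(text)
        lowered = text.lower()
        if any(p.lower() in lowered for p in interaction.repeat_phrases):
            return "repeat", heard    # the IVR did not understand us
        finish = interaction.finish_on_phrase
        if finish is not None and finish.lower() in lowered:
            return "next", heard      # the IVR told us to speak
    return "end", heard               # the audio ended, so the call is over


# The IVR asks us to speak: move on as soon as the phrase is heard.
welcome = Interaction(say="(dial)", finish_on_phrase="tell me what you are calling about")
print(run_interaction(welcome, [
    (1.0, "Welcome to the airline."),
    (3.0, "Please tell me what you are calling about."),
    (5.0, "You can say things like..."),
]))

# No finishing phrase: move on after 10 seconds, or repeat if asked to.
reservation = Interaction(
    say="New flight reservation",
    listening_timeout=10,
    repeat_phrases=["didn't get that"],
)
print(run_interaction(reservation, [(1.5, "Sorry, I didn't get that.")]))
print(run_interaction(reservation, [(2.0, "Okay."), (12.0, "Still looking.")]))
```

Checking the repeat phrases before the finishing phrase is a design choice of this sketch: it ensures a misunderstanding is retried even when the same chunk of audio also contains the prompt to speak.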

Get Started with IVR Automation

If you are a subscribed user of Bespoken looking for IVR test automation, get in touch with us and we will help you set up your first IVR tests. If you are not yet one of our happy customers, apply for a free trial here.

Read more about our IVR tests in our docs here. Take a look at this American Airlines sample project here.