What Is Usability Performance Testing?

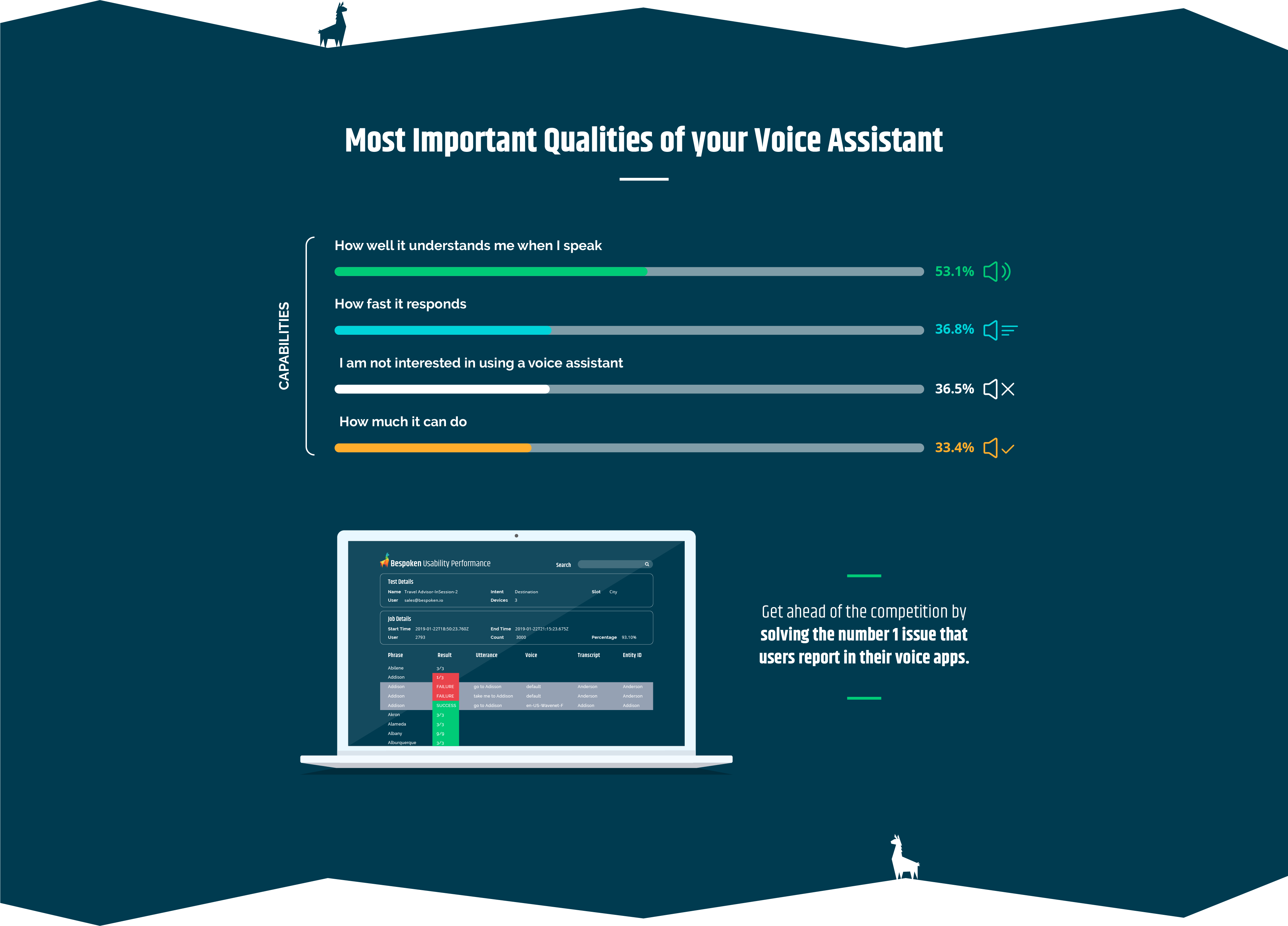

In Bespoken’s recent webinar (slides, video), we officially launched our Usability Performance Testing solution, a product built to solve the toughest problem in voice: ensuring users are understood correctly.

Automated Usability Performance Testing addresses this by enabling comprehensive and repeatable testing of interaction models. We start by capturing baseline metrics on the skill’s performance, then iterate and improve on the interaction model, using the results as a guide.

By leveraging Bespoken’s Usability Performance Testing, skill builders can now ensure users of voice applications have the best experience possible.

Bespoken partnered with Opearlo to work on their popular quiz skill Guess My Name. Opearlo, a Y-Combinator-backed company, makes some of the most popular gaming and productivity skills for Alexa, such as Find My Phone and Animal Rescue (winner of the Alexa Skills Challenge for Kids). Opearlo and Bespoken have collaborated for some time and saw this as a great opportunity to showcase how Usability Performance Testing can help make a great skill even better.

Guess My Name – A Fun, Simple Skill With Complex Speech Recognition Issues

Guess My Name is an entertaining quiz skill in which the user is given clues as to the identity of a person or thing, and their job is to guess who it is. Its fun and engaging gameplay has made it extremely popular with users. But with hundreds of possible answers to guess, the speech recognition (ASR) and natural language understanding (NLU) for the skill can be challenging: handling complex, custom slot values is a struggle for Alexa.

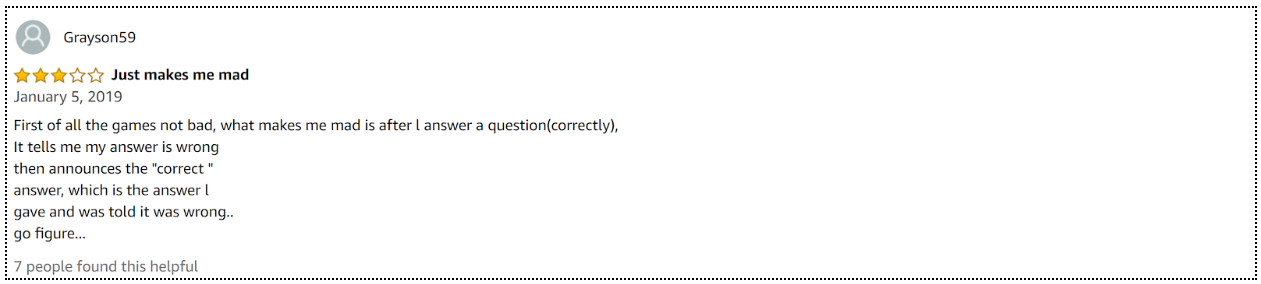

These challenges are reflected in the reviews of the Guess My Name skill – here are some examples of recent reviews:

Oscar Merry, co-founder and CTO of Opearlo and an Alexa Champion, said this about the challenge of tuning an interaction model for Alexa:

There are very few tools available for handling this. What we are typically left with when we make adjustments to our interaction model is submitting a new version of the skill, and then closely monitoring the reviews for issues reported by users. It’s a user-unfriendly and unreliable way to diagnose speech recognition problems.

A Better Way To Diagnose And Fix AI Issues

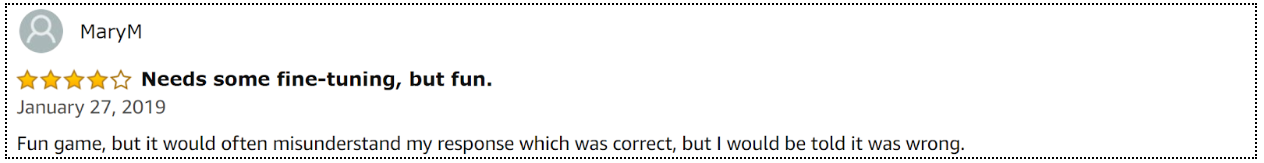

Leveraging our Usability Performance Testing, we ran an initial set of tests against the skill. The tests are easy to set up – we worked with Oscar to identify:

What intents and slots do you want to test?

In this case, the key intent was the one where the user guesses the answer.

What phrases do you want to test?

Coming up with phrase variations is important for testing – for example, when guessing a name, users might say: “You are a llama” or “the answer is llama” or even just “llama”. The phrasing affects how well it is recognized by Alexa.
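To make the idea of phrase variations concrete, here is a minimal Python sketch that expands a handful of carrier phrases across slot values. The template list and the `build_utterances` helper are illustrative assumptions, not part of Bespoken's tooling or of the Guess My Name skill.

```python
from itertools import product

# Hypothetical carrier phrases; "{answer}" marks where the slot value goes.
TEMPLATES = ["you are a {answer}", "the answer is {answer}", "{answer}"]


def build_utterances(slot_values, templates=TEMPLATES):
    """Return every (expected slot value, spoken phrase) pair to test."""
    return [
        (value, template.format(answer=value))
        for value, template in product(slot_values, templates)
    ]


utterances = build_utterances(["llama", "scarf"])
for expected, phrase in utterances:
    print(f"expect {expected!r} when the user says {phrase!r}")
```

With hundreds of slot values, even three templates produce a test set far larger than anyone would want to speak by hand, which is why generating the phrases programmatically is attractive.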

What type of speakers do you want to test with?

Just as important as phrasing is who the user is. We use text-to-speech from Amazon Polly and Google TTS to create different voices, which allows us to emulate different genders, ages, and even accents for our speakers.
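One way to picture the combination of speakers and phrases is as a simple test matrix. The sketch below only builds that matrix and does not call any text-to-speech API. The `Speaker` class, the accent labels, and the specific voice names are assumptions chosen for illustration; real runs would pick voices from each provider's catalog.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Speaker:
    provider: str  # "polly" or "google" (labels used by this sketch only)
    voice: str     # voice identifier that would be passed to the provider
    accent: str    # free-form label, used only for reporting


# Illustrative speaker list (assumed, not taken from the original tests).
SPEAKERS = [
    Speaker("polly", "Joanna", "US English"),
    Speaker("polly", "Brian", "British English"),
    Speaker("google", "en-AU-Standard-A", "Australian English"),
]


def build_test_cases(phrases, speakers=SPEAKERS):
    """Pair every speaker with every phrase so each voice says each phrase."""
    return [
        {"speaker": speaker, "phrase": phrase}
        for speaker, phrase in product(speakers, phrases)
    ]


cases = build_test_cases(["the answer is llama", "llama"])
print(f"{len(cases)} test cases")
```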

With this basic information, we were able to get started. Our process for improving the skill was straightforward:

- Run initial tests to get a baseline on performance

- Iterate on the interaction model and the code based on the results

- Run additional tests to see the impact on performance
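The loop above depends on being able to score each run the same way. As a rough sketch, assuming each test result records the slot value we expected and the one Alexa actually returned, scoring could look like this. The `score_run` helper and the sample data are made up for illustration and are not results from the Guess My Name tests.

```python
def score_run(results):
    """Return (accuracy, failures) for a list of test results.

    Each result is a dict with 'expected' and 'actual' slot values.
    """
    if not results:
        raise ValueError("cannot score an empty test run")
    failures = [r for r in results if r["expected"] != r["actual"]]
    accuracy = 1 - len(failures) / len(results)
    return accuracy, failures


# Made-up sample data standing in for a baseline run.
baseline = [
    {"expected": "llama", "actual": "llama"},
    {"expected": "scarf", "actual": "scar"},
    {"expected": "scarf", "actual": "scarf"},
    {"expected": "llama", "actual": "llama"},
]

accuracy, failures = score_run(baseline)
print(f"baseline accuracy: {accuracy:.0%}")
for failure in failures:
    print(f"expected {failure['expected']!r}, got {failure['actual']!r}")
```

Re-running the same scoring after each interaction model change gives a like-for-like comparison against the baseline.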

Using this approach, across a few iterations and a couple of weeks, we were able to greatly improve the skill’s performance.

80% fewer errors, >99% performance

Our initial tests involved thousands of utterances: hundreds of slot values tested, with a variety of variations across speakers and phrasings. Here is an example of an utterance that was misunderstood:

In this case, we see that although the user has said “scarf”, it is being misunderstood as “scar”. One easy way to fix this is to add “scar” as a synonym for the slot value “scarf”. Many cases can be resolved through this technique, as well as through other simple heuristics. We will explore the process for triaging and improving the interaction model in depth in a future post.
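As an illustration of the synonym fix, the sketch below edits an Alexa interaction model loaded as a Python dictionary. The nesting (`interactionModel` → `languageModel` → `types` → `values` → `name.synonyms`) follows the Alexa interaction model JSON layout; the slot type name `AnswerType` and the `add_synonym` helper are assumptions, since the post does not show the skill's real model.

```python
def add_synonym(model, slot_type, value, synonym):
    """Add `synonym` to `value` in the given custom slot type.

    Returns True if the slot value was found, False otherwise.
    """
    types = model["interactionModel"]["languageModel"]["types"]
    for slot in types:
        if slot["name"] != slot_type:
            continue
        for entry in slot["values"]:
            name = entry["name"]
            if name["value"] == value:
                synonyms = name.setdefault("synonyms", [])
                if synonym not in synonyms:  # avoid duplicate entries
                    synonyms.append(synonym)
                return True
    return False


# Tiny stand-in model; "AnswerType" is a hypothetical slot type name.
model = {
    "interactionModel": {
        "languageModel": {
            "types": [
                {
                    "name": "AnswerType",
                    "values": [
                        {"name": {"value": "scarf"}},
                        {"name": {"value": "llama"}},
                    ],
                }
            ]
        }
    }
}

added = add_synonym(model, "AnswerType", "scarf", "scar")
print(model["interactionModel"]["languageModel"]["types"][0]["values"][0])
```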

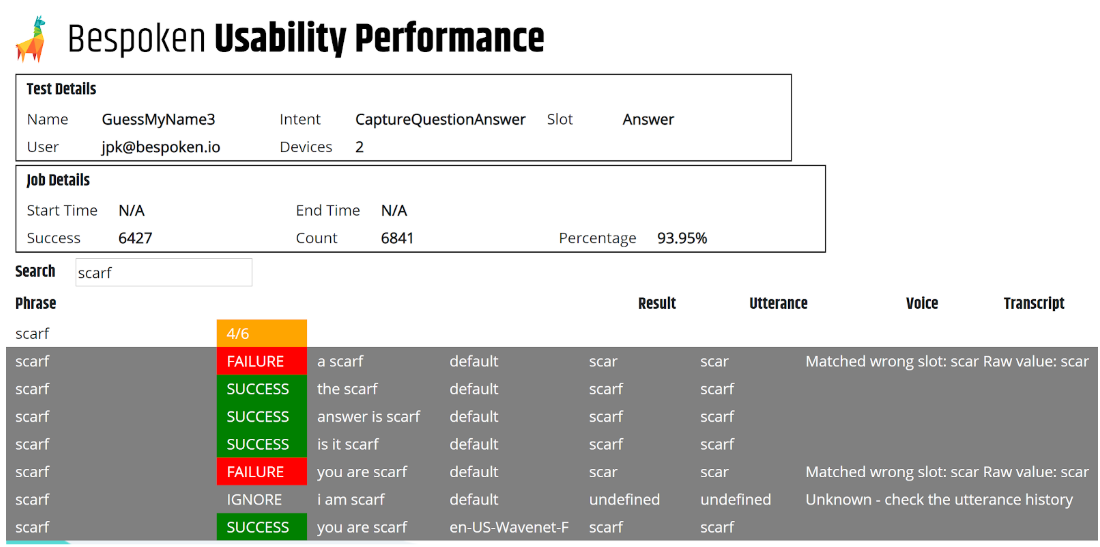

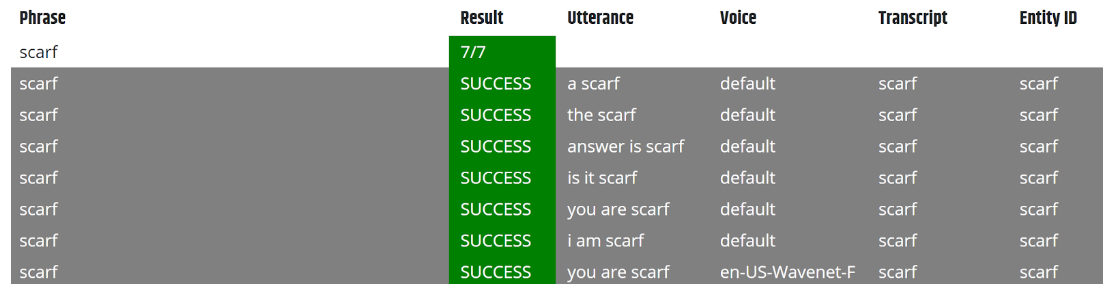

When we re-run our tests after changing our interaction model, here are our results:

Perfect!

Overall, our initial results were okay: about 94% of the time, the speech was correctly understood. This might even sound good (and is perhaps better than many skills that are out there). But if you step back and think about it, it means more than 5% of the time, the user is NOT being understood correctly. That is a very frustrating experience and leads to the bad reviews we showed above. Put it this way: if a button in your app did precisely the wrong thing more than 5% of the time a user clicked it, how would users feel about it? Would they continue to use the app? And what would they think about the quality of your software? It’s a major usability issue, one that voice developers need to proactively address.

We worked closely with Opearlo to analyze these results, and Oscar and his team made improvements to the interaction model and the code. After a couple more iterations, we were able to get the acceptance rate to >99%. This means less than a 1% error rate – a more than 80% decrease in errors. This had a huge impact on users, which translated to better reviews.
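The arithmetic behind the "more than 80%" figure can be checked with a few lines of Python. The inputs below are the rounded numbers from this post (about 94% before, 99% as the lower bound after), so the output is an approximation rather than an exact measurement.

```python
def relative_error_reduction(accuracy_before, accuracy_after):
    """Fraction by which the error rate shrank between two runs."""
    error_before = 1 - accuracy_before
    error_after = 1 - accuracy_after
    return (error_before - error_after) / error_before


# Rounded figures from the post: ~6% errors before, under 1% after.
reduction = relative_error_reduction(0.94, 0.99)
print(f"errors reduced by about {reduction:.0%}")
```

Going from roughly 6 errors per 100 utterances to fewer than 1 removes at least 5 of every 6 errors, which is where the figure of more than 80% comes from.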

Prior to the changes, more than 26% of the reviews referenced understanding and comprehension issues, and the average rating was 4.4 stars. With the new version launched, only a single user has since complained about poor understanding, and the average rating has been 4.7 stars. That’s a significant benefit for the users of Guess My Name, as well as for Opearlo. Great reviews mean more users, happier users, and more prominent positioning in the skill store. And if you are an agency building skills on behalf of others, it means satisfied customers as well.

“Alexa, how do I get started with Usability Performance Testing?”

Having just launched our Usability Performance Testing product, we’re really excited about the early results we are seeing with our partners.

Is recognizing and understanding your users an issue for you and your team? Now there’s a software solution that can help. Contact us at contact@bespoken.io or get started here. We’ll be happy to get you set up in no time!