Driving Conversational Accuracy Improvement with the Bespoken Platform

About The Customer

Bespoken performed this work on behalf of one of the world’s leading providers of governance, risk and transparency products. Their cybersecurity division helps companies mitigate the damage of security incidents and works proactively with affected users to help them understand how security breaches impact them and how to minimize that impact.

“This is the most fruitful and impactful testing and results we’ve ever seen with any vendor. I can’t tell you how blown away the whole team was with the previous results Bespoken showed, it’s like we’re not just reading blog posts and assuming it’s right but actually seeing real data for our particular use case”

— Senior Engineer, IVR/Conversational AI

The Caller Authentication Challenge

Users call the system to better understand how to protect themselves in the case of a cybersecurity incident. As with so many businesses, the first step in the call is to have the IVR verify who the caller is. This authentication process verifies the caller’s identity as a registered customer using a combination of techniques. In this case, these include an alphanumeric identifier unique to the customer, as well as their name, address information, and other key data known only to the customer.

Capturing alphanumeric IDs and names might seem like a prosaic task for an Interactive Voice Response (IVR) system, but in fact it is deceptively challenging. To deliver optimal performance and accuracy for users, it requires leveraging multiple speech recognition models, carefully trained for these specific use-cases.

And this use-case is hardly exceptional – the application we detail here covers what is essentially a single customer intent. As systems increase in the scale of their intents and functionality, the challenge only grows.

The Initial Architecture

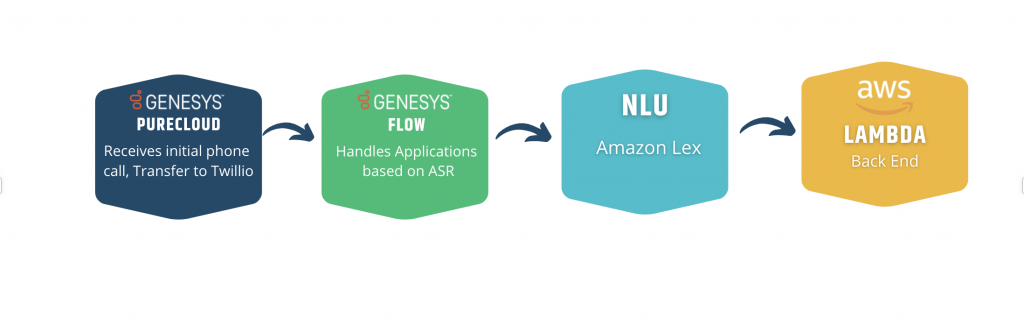

The initial architecture used what is considered to be one of the state-of-the-art, machine-learning-based Automatic Speech Recognition (ASR) and Natural Language Understanding (NLU) platforms on the market – Amazon Lex. From the outside, this looks like a sound decision, and if one is picking a single vendor for all their intents and applications, it is a credible choice. The decision was based on publicly available, generic benchmarks.

The catch is that generic benchmarks do not address the specific use-case of this customer. Once they had built their bot, with Amazon Lex best practices in mind, they observed accuracy issues in their application, especially with the initial member ID capture – an 11-digit alphanumeric sequence.

At this point, the customer reached out to Bespoken for help. The Bespoken Platform offered an easy way to exhaustively measure the performance of the system they had assembled. The Bespoken Delivery Team was engaged to analyze and re-train the system across a number of components, with the goal of identifying an approach that would improve performance and, ultimately, deliver the highest customer satisfaction.

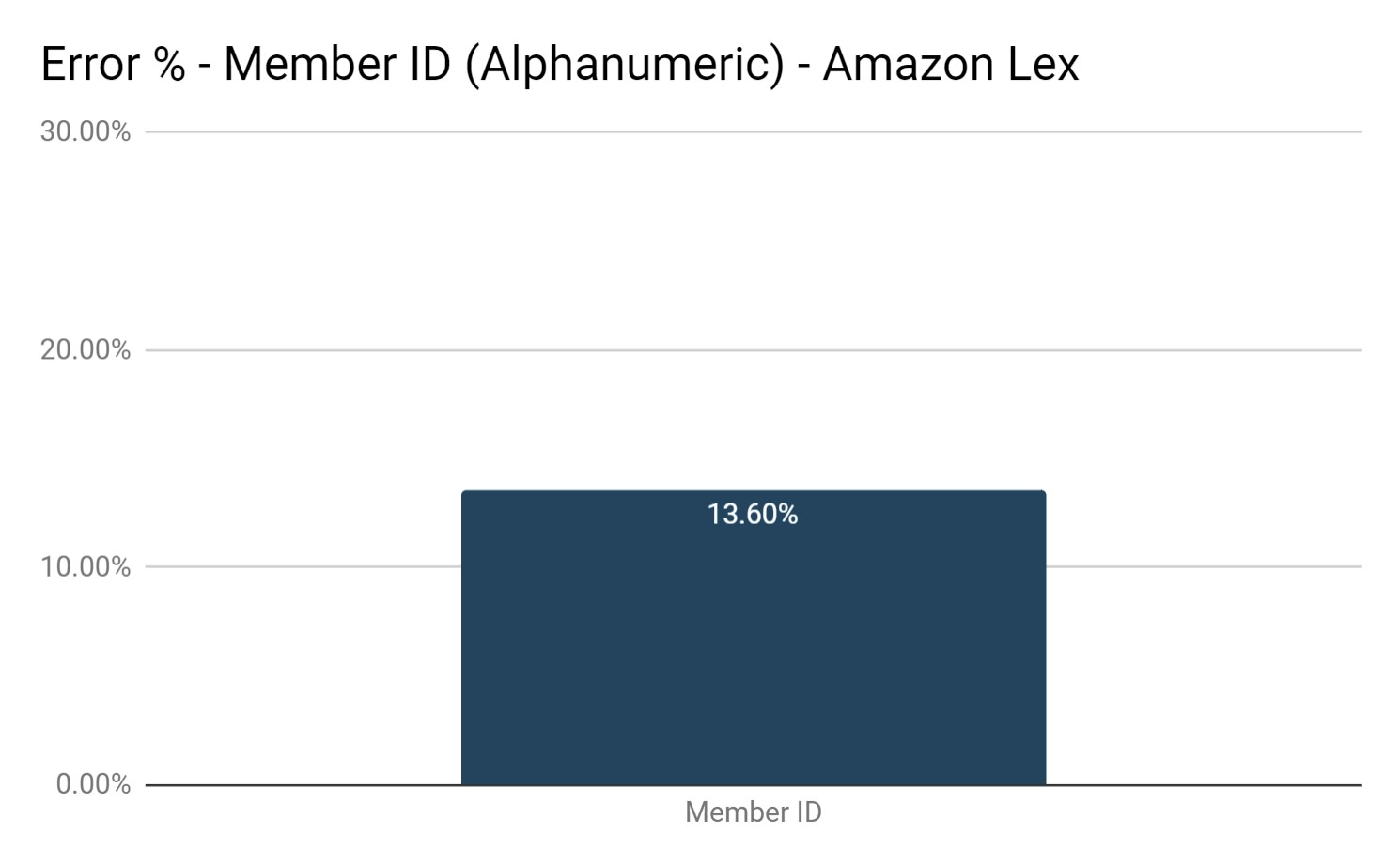

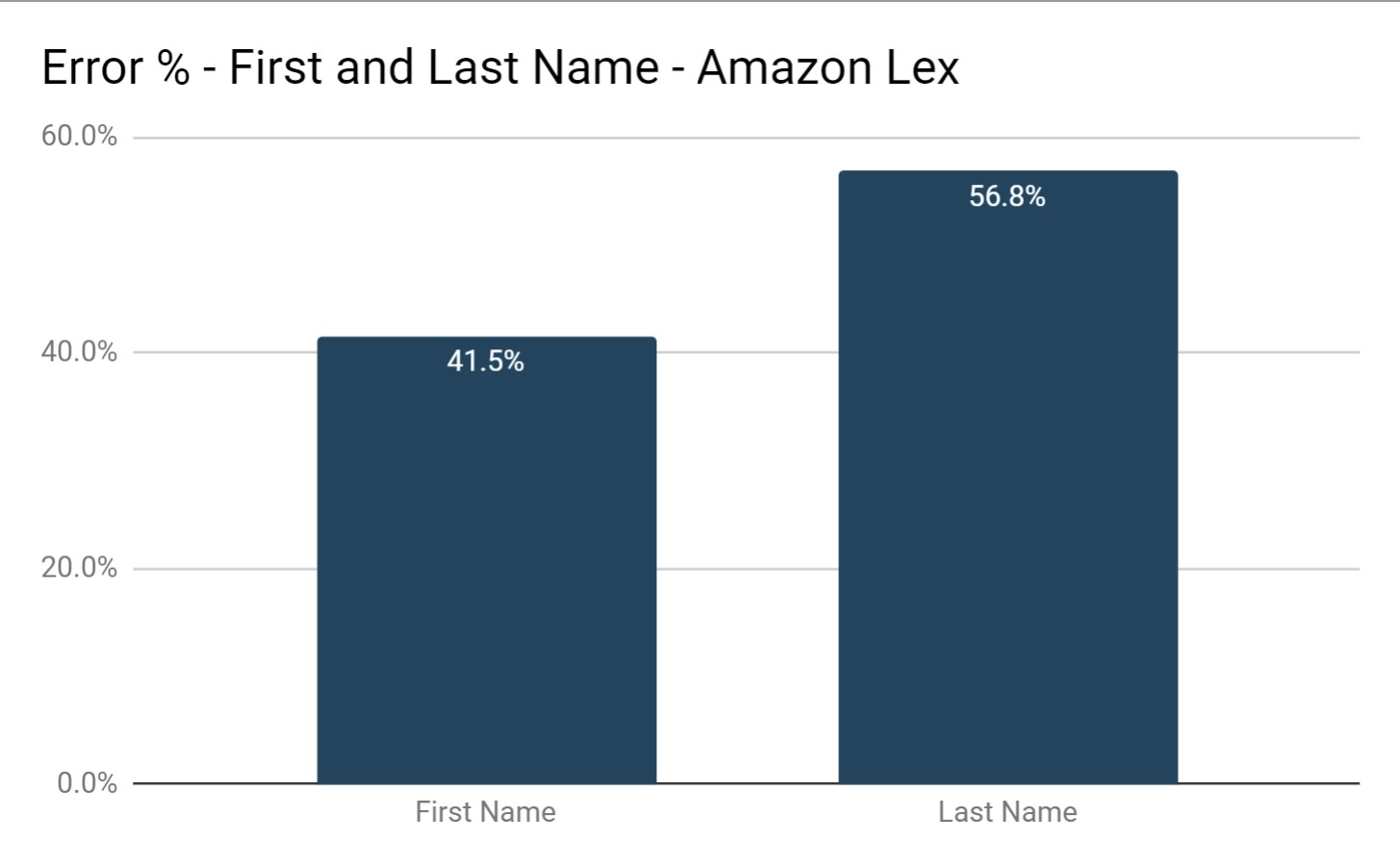

The Benchmark

Bespoken first measured the performance of Amazon Lex for member IDs (an alphanumeric sequence), first names, and last names. Here are the results:

These are very high error rates, especially on the first name and last name! This will almost certainly frustrate users and lead to more customers opting out of the automated flow and instead talking to more expensive, and more time-constrained, human agents.

Bespoken engaged with the customer team to understand the specific context and constraints imposed on their application by legal and information security requirements, which turned out to be non-negotiable. This inspired a joint effort to train and tune the use of Lex for better performance, leading to significant improvements:

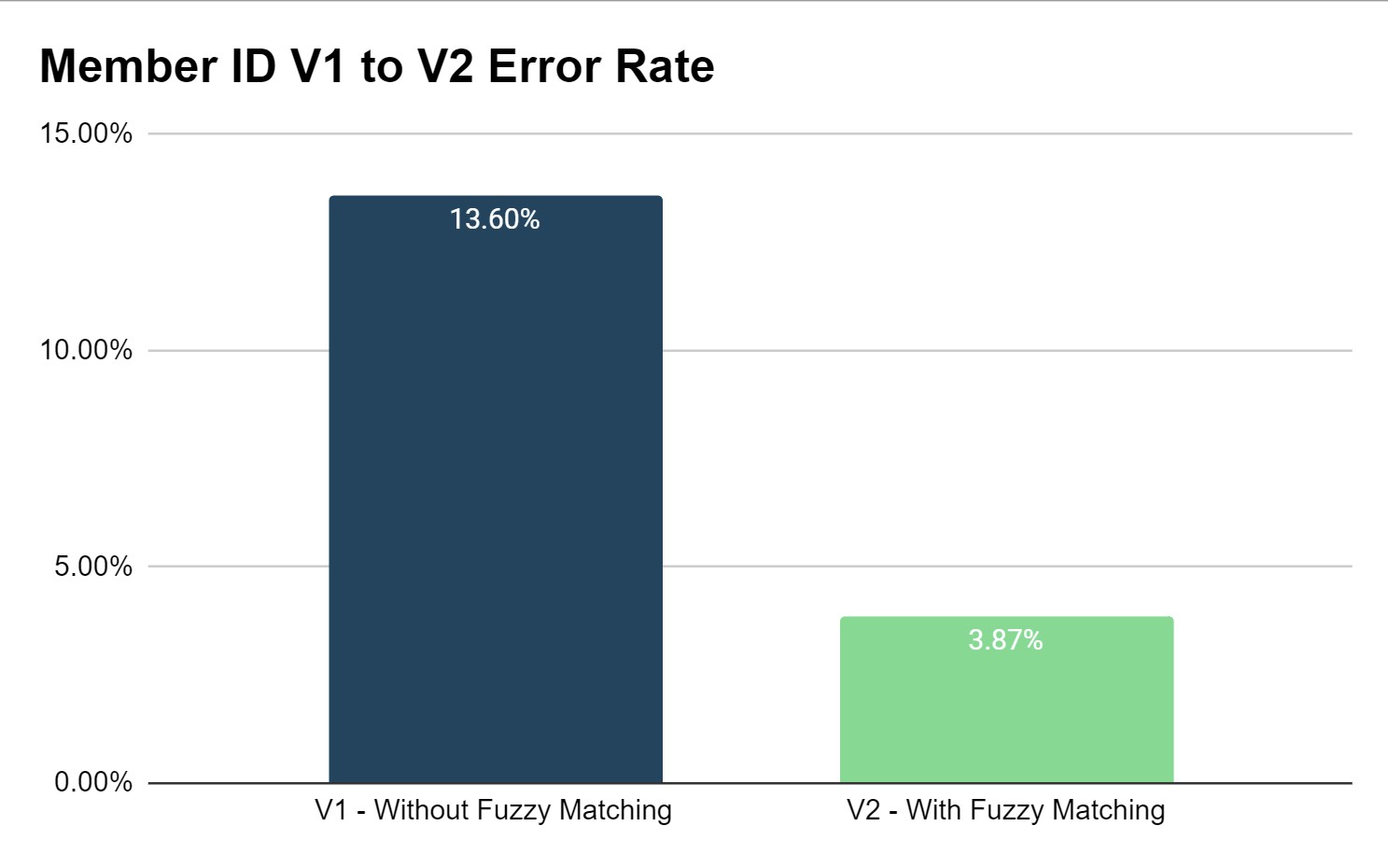

Bespoken applied its deep industry experience with natural language and speech recognition to implement a fuzzy search algorithm to “repair” misunderstood Member IDs. This made a huge improvement in performance – reducing errors by more than 71%. This illustrates the immense benefit that comes from testing and training natural language systems.
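The source does not describe the fuzzy search algorithm itself, so the following is only a minimal sketch of the general idea, under stated assumptions: the set of valid Member IDs is available for lookup, edit distance is used as the similarity measure, and a repair is accepted only when exactly one known ID is within a small distance of what was heard. The function names `edit_distance` and `repair_member_id` and the `max_distance` threshold are hypothetical.

```python
from typing import Iterable, Optional


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def repair_member_id(heard: str,
                     known_ids: Iterable[str],
                     max_distance: int = 1) -> Optional[str]:
    """Return the single known ID closest to `heard`, or None.

    None is returned when nothing is close enough or when two known IDs
    are equally close, since guessing would be risky for authentication.
    """
    normalized = heard.upper().replace(" ", "")
    scored = sorted((edit_distance(normalized, k), k) for k in known_ids)
    if not scored or scored[0][0] > max_distance:
        return None
    if len(scored) > 1 and scored[1][0] == scored[0][0]:
        return None  # ambiguous: more than one candidate at the same distance
    return scored[0][1]


# Hypothetical sample data, not taken from the case study.
KNOWN_IDS = ["ABC123456", "XYZ987654", "ABD123457"]
```

With this sample data, `repair_member_id("abc12345g", KNOWN_IDS)` resolves to `"ABC123456"`, while an input that is far from every known ID returns `None` so the caller can be re-prompted. Refusing ambiguous matches trades some recovery rate for safety, which seems a sensible default in an authentication flow.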

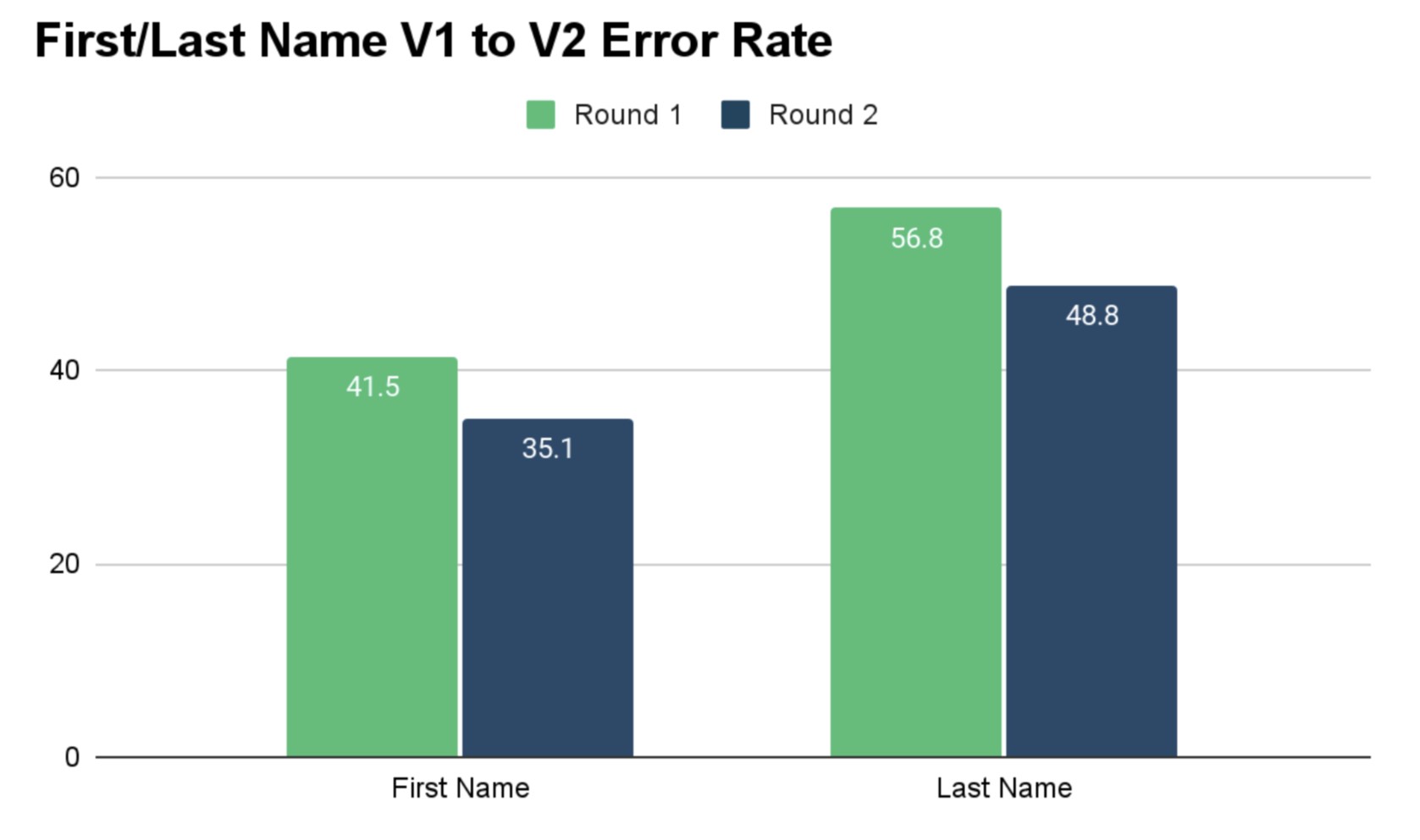

Regarding first and last name recognition, comparatively less improvement resulted from this tuning effort:

Even though at this step in the authentication process we “know” what the user’s name is (because the application has done a lookup to a user database with the member ID), we are not able to leverage that contextual data with Amazon Lex to improve performance. Bespoken confirmed that this is a significant limitation of Amazon Lex that hamstrings our customer for their specific use-case. This is also an illustration of one of Bespoken’s NL implementation principles:

There is not “one ring to rule them all” when it comes to ASR/NLU technology.

Instead, it is about picking the right tool for each job, then training it to maximum effectiveness. This principle comes from the growing body of practitioner experience that Bespoken is exposed to: always be open to expanding to an architecture that uses the best conversational AI technology for each turn in the conversation.

Microsoft Azure Comes To The Rescue (Sort Of)

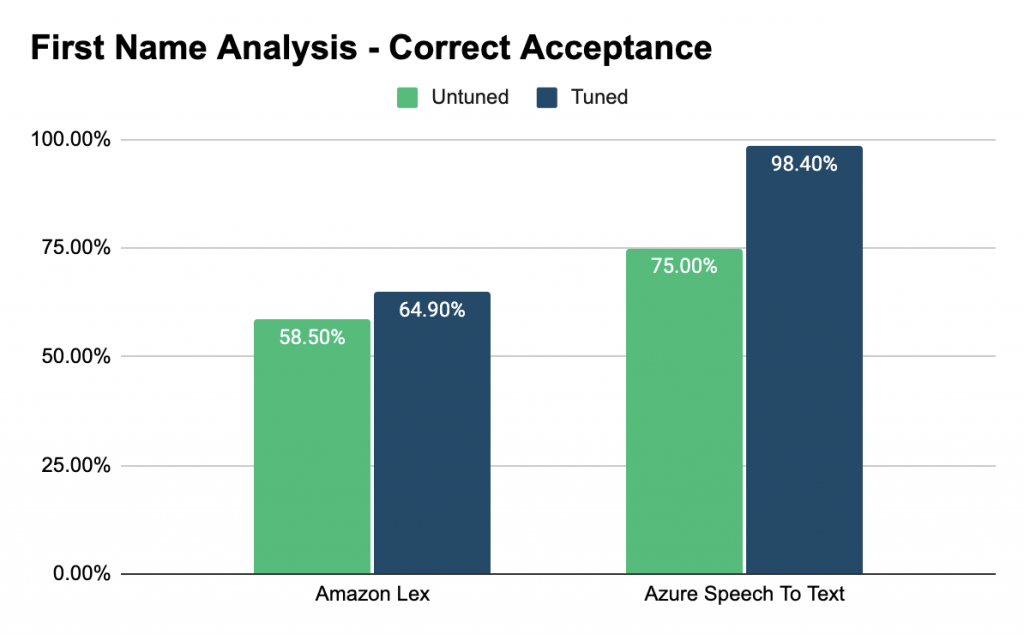

In line with this guidance that hybrid, best-of-breed solutions are the most effective for Conversational AI, Bespoken’s Delivery Team took the use case into a testing lab and converted part of it to run on an IVR runtime component called the Bespoken Orchestrator. This moves the application to a hybrid architecture that enables easy sampling of results across different conversational AI platforms. Once the use case was ported onto the Orchestrator API, Bespoken could easily experiment with and measure Microsoft Azure Speech for names:

These are AMAZING improvements – a reduction in errors of more than 95%!

Consider how it is accomplished: we “feed” the expected name for the user (taken from our Member ID lookup) to the ASR as a recognition hint. This sort of “just-in-time” tuning can do wonders for ASR systems – and Bespoken regularly sees just how beneficial it can be in Conversational IVR accuracy work. It also highlights how ASR performance is not just about who has the best model – it is about the features of the platform that surround this model. In this case, knowing that recognition hints could be sent to Azure (and not to Amazon Lex) made all the difference.
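As a rough sketch of what sending such a hint could look like: the Azure Speech SDK for Python exposes phrase lists, obtained with `speechsdk.PhraseListGrammar.from_recognizer(recognizer)` and filled with `addPhrase(...)`. Whether this project used that exact feature is an assumption. The helper names `build_name_hints` and `apply_hints` are hypothetical, and `RecordingPhraseList` is a stand-in so the example runs without the SDK or credentials.

```python
from typing import Iterable, List, Protocol


class PhraseList(Protocol):
    """Anything with an addPhrase method, such as an Azure PhraseListGrammar."""

    def addPhrase(self, phrase: str) -> None: ...


def build_name_hints(first_name: str, last_name: str) -> List[str]:
    """Build hint phrases for one caller from the Member ID lookup result."""
    hints = [part.strip() for part in (first_name, last_name) if part.strip()]
    if len(hints) == 2:
        hints.append(" ".join(hints))  # also hint the full name
    return hints


def apply_hints(phrase_list: PhraseList, hints: Iterable[str]) -> None:
    """Register each hint with the recognizer's phrase list before listening."""
    for hint in hints:
        phrase_list.addPhrase(hint)


class RecordingPhraseList:
    """Stand-in for the SDK object; it only records what it was given."""

    def __init__(self) -> None:
        self.phrases: List[str] = []

    def addPhrase(self, phrase: str) -> None:
        self.phrases.append(phrase)
```

In a real integration, the object passed to `apply_hints` would be the phrase list created from the live recognizer for that turn, and the hints would be rebuilt for every caller, which is what makes the tuning “just-in-time”.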

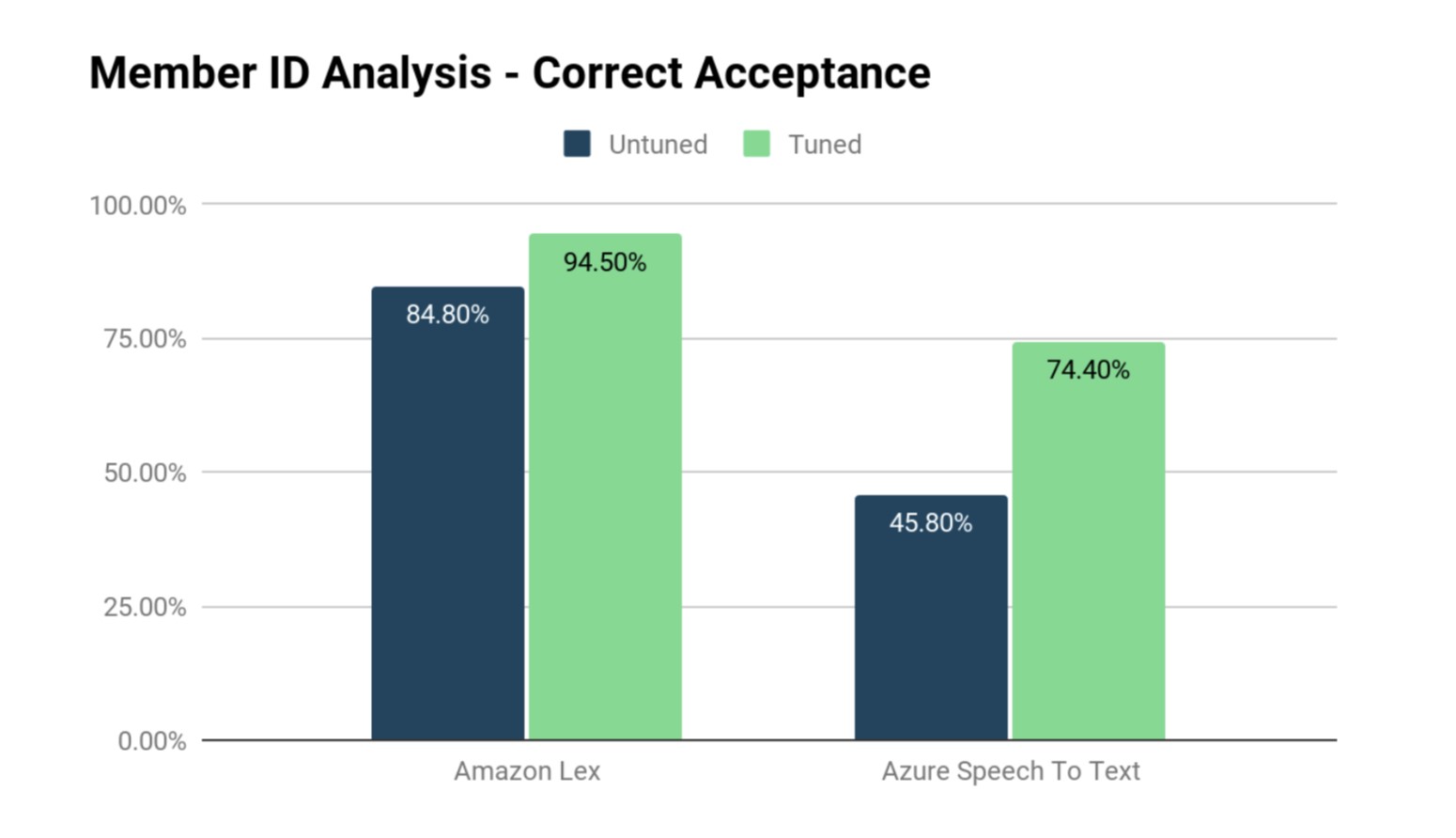

Buoyed by these results, Bespoken next looked at how Azure does with the Member IDs:

Not very good at all, especially compared to Lex. So, it appears that for filling the Member ID slot, Amazon Lex is a better choice, while for First Name and Last Name, Azure is.
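The per-slot choice described above can be pictured as a small routing table. This is an illustrative sketch only, not the Bespoken Orchestrator API: `SlotRouter`, the slot names, and the `fake_lex` and `fake_azure` functions are all hypothetical stand-ins for real engine integrations.

```python
from typing import Callable, Dict, Optional

# An engine takes raw audio plus an optional recognition hint, returns text.
Recognizer = Callable[[bytes, Optional[str]], str]


class SlotRouter:
    """Send each slot's audio to whichever engine measured best for it."""

    def __init__(self) -> None:
        self._engines: Dict[str, Recognizer] = {}

    def register(self, slot: str, engine: Recognizer) -> None:
        self._engines[slot] = engine

    def recognize(self, slot: str, audio: bytes,
                  hint: Optional[str] = None) -> str:
        if slot not in self._engines:
            raise KeyError(f"no engine registered for slot {slot!r}")
        return self._engines[slot](audio, hint)


def fake_lex(audio: bytes, hint: Optional[str] = None) -> str:
    """Placeholder for a Lex call; just echoes the 'audio' for the demo."""
    return "lex:" + audio.decode()


def fake_azure(audio: bytes, hint: Optional[str] = None) -> str:
    """Placeholder for an Azure call; prefers the hint when one is given."""
    return "azure:" + (hint or audio.decode())


router = SlotRouter()
router.register("member_id", fake_lex)     # Lex measured better for IDs
router.register("first_name", fake_azure)  # Azure measured better for names
router.register("last_name", fake_azure)
```

Because the mapping is data rather than code, swapping the engine for one slot after a new benchmark is a one-line change that leaves the rest of the call flow untouched.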

One Last Test

At this point, the customer and Bespoken agreed there is a solid approach for this application. However, Bespoken wanted to keep digging and try one more thing – what would happen if a grammar-based model was employed to capture Member ID?

After all, the Member ID, in this case, is tightly prescribed: three letters, followed by six digits. This fits perfectly with a grammar-driven ASR, such as that offered by IBM Watson. Though grammars may seem a bit outmoded compared to the relatively new, deep-learning-based models, they do have their advantages. The new deep-learning-based models excel at transcription – you can talk to them about anything, and they are likely to understand you. But grammars excel in narrower domains, and they are still often the correct choice for use-cases where what the user can say is highly constrained.
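To make the constraint concrete, here is the stated format (three letters followed by six digits) expressed as a pattern applied to a transcript. This is not a Watson grammar and not how a grammar-based recognizer works internally: a real grammar restricts what the engine can recognize in the first place, whereas this sketch only checks a transcript after the fact. The names `parse_member_id` and `DIGIT_WORDS` are hypothetical.

```python
import re
from typing import Optional

# Spoken digits mapped to characters (assumes English transcripts).
DIGIT_WORDS = {
    "zero": "0", "one": "1", "two": "2", "three": "3", "four": "4",
    "five": "5", "six": "6", "seven": "7", "eight": "8", "nine": "9",
}

# The format stated in the text: three letters, then six digits.
MEMBER_ID_PATTERN = re.compile(r"[A-Z]{3}[0-9]{6}")


def parse_member_id(transcript: str) -> Optional[str]:
    """Collapse a transcript into an ID, or return None if it cannot fit."""
    tokens = re.findall(r"[a-z0-9]+", transcript.lower())
    # Replace spelled-out digits; keep every other token as typed.
    chars = [DIGIT_WORDS.get(token, token.upper()) for token in tokens]
    candidate = "".join(chars)
    return candidate if MEMBER_ID_PATTERN.fullmatch(candidate) else None
```

For example, `parse_member_id("A B C one two three 4 5 6")` yields `"ABC123456"`, while anything that does not fit the pattern is rejected outright. The narrower the set of valid utterances, the more a constraint like this can rule out.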

The Bespoken Orchestrator makes experiments such as this easy. Member ID recognition with Watson was an easy experiment to try, and happily, accuracy improved to more than 90% before applying the fuzzy search algorithm. With the fuzzy search in place as well, accuracy exceeded 95%. These are stellar results!

This also demonstrates another key point – even with just a single vendor, the application may still end up using multiple models available from that vendor. So even using the same technical componentry, we may end up using completely different models, which require separate training and monitoring regimens.

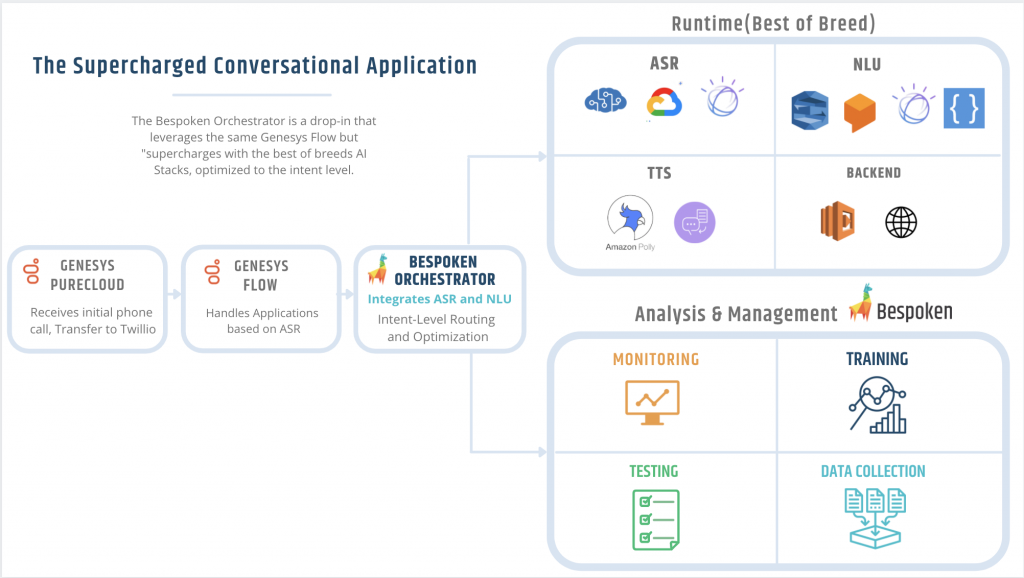

The Supercharged Architecture

The next step is bringing these remarkable testing results out of the Bespoken Delivery Team’s testing lab and making them available for real users, ideally with a minimum of re-development by the customer. This is where the Bespoken Orchestrator comes in – using it, we can just “drop in” new ASR/NLU components and models.

The original Genesys flow has not changed at all, nor has our backend code or NLU.

So what has changed? The ASR engine we are using for the first name and last name use-cases. This has no impact at all on the implementation code of the customer but has a huge effect on the accuracy of the system and customer experience.

Along with the impact on accuracy, we have also put into place our industry-leading testing, training, and monitoring platform. With it, we ensure the system is working correctly and accurately, and is always available. What is more, we assist with the ongoing training and optimization of the system by keeping a watchful eye over the interactions real users are having.

All of this ensures the customer system is working now and going forward. And as it evolves with new features, it is continuously optimized to deliver the best possible user experience. Thanks to this, we achieve what we consider to be the four key qualities of conversational systems:

- Trainable – our system is easy to train and optimize over time

- Flexible – it is easy to add and replace components in our architecture, which is critical to take advantage of all the incredible innovation happening in the Conversational AI space

- Contextual – we are able to select the best approach down to the intent and even slot-level. This allows us to ensure every intent is performing at its very best and the entire user experience is superb.

The Return on Investment

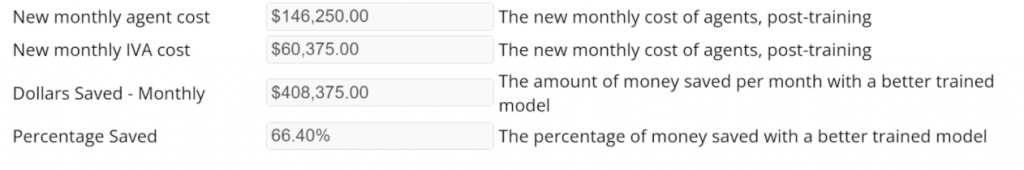

The ROI for our customer was immense. Without sharing their specific numbers, we can use our handy ROI calculator to quantify the benefit of the improvements we made. Let’s say this is our starting point:

- 5,000 calls per day

- 5 interactions per call

- Initial accuracy rate of 62% (blended rate across key data collection points)

If we plug these numbers, along with our new accuracy rate (a blended 95%), into our ROI calculator, we get these results:

$408,000 per month! That is the incredible impact that accuracy can have on the contact center. You can leverage our calculator to see how these numbers will work out for your own use-case and transactional volume.

Key Takeaways

Boiling it all down, here is what this example of applying Bespoken demonstrates:

- Accuracy Matters – it drives real business results

- A new class of Hybrid AI Architectures for Conversational IVRs has been shown to reduce risks and unlock the best execution for customer interactions

- Bespoken’s Orchestrator and expert advice are driving upgrades to a Hybrid AI Architecture

- Bespoken can help you accurately measure the ROI of your improvement in customer satisfaction

Reach out to contact@bespoken.io to find out more about how these improvements can apply to your Conversational IVR.