There are frequent discussions in voice technology circles about voice app performance. The definition of performance in these conversations usually drifts toward voice user experience – how effective the app is at fulfilling a user’s intent, and its rate of re-engagement or retention. These are all important metrics.

However, we hear far less about traditional measures of application performance such as availability, error rates, average response time, and request rates. Neglecting these also has a substantial negative impact on voice user experience and engagement in both the short and long term.

The Elements of Voice User Experience

When you consider user experience for an Alexa skill or Google Assistant app, traditional performance metrics remain relevant for obvious reasons. A user conversation cannot be completed if your Assistant voice app is not available. Nor can an intent be fulfilled if your Alexa skill keeps throwing errors and frustrating users. Delays in voice app response lead to abandoned sessions. I could go on – the problem scenarios are numerous.

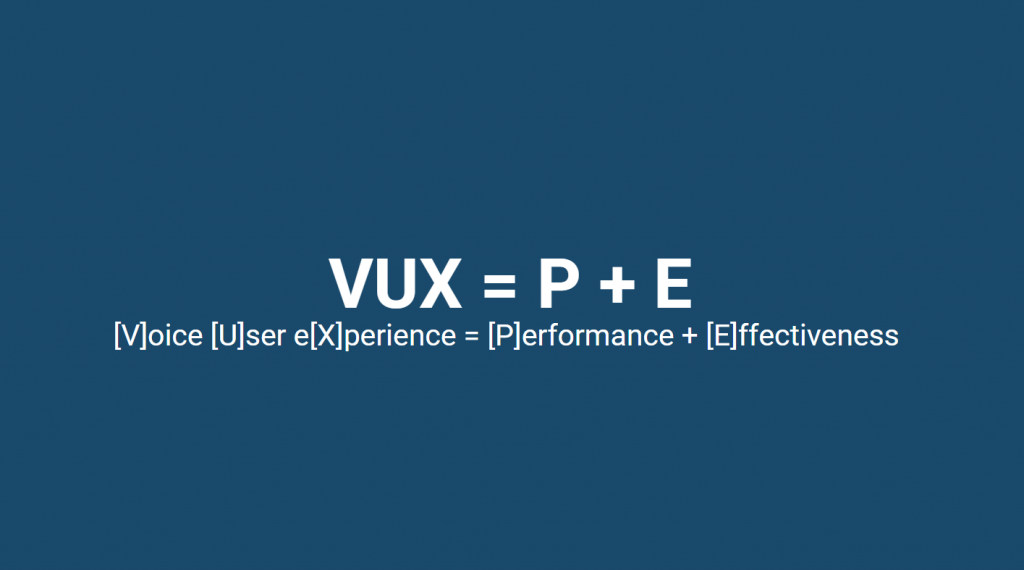

Voice User Experience = Performance + Effectiveness

Voice user experience is impacted by both the performance and effectiveness of a voice app. Effectiveness is easier for outside observers to understand, and it often leads to interesting discussions. This is where analytics shine.

How many unique users? How many interactions? What was the average session length? What is your retention? How many positive reviews have you received? How are these metrics trending over time?

Effectiveness is driven by the voice app design, user flow, presence of appropriate features to fulfill user intents and so on. However, none of these factors matter if voice app performance is poor. If your analytics show average session length declining, it could be a flaw in your design and user interaction model. It could also be caused by deteriorating app response times.

A decline in unique users could be the result of reduced marketing or point to a feature deficit that undermines repeat usage. It could also be driven by lack of app availability or increasing invocation error rates.

Like It or Not, Performance Comes First

Depending on your background you may prefer to work on effectiveness or performance challenges. However, performance ultimately comes first in user experience.

Performance determines whether a session can happen at all, through tangible elements such as wait time and the likelihood that a request receives a proper response. Unfortunately, voice assistant platforms do not provide robust monitoring, alerting, and diagnostic tools to help developers. That is the gap that Bespoken addresses today. Read more about our approach to diagnostics here.

Some people have asked me whether performance is less of an issue now that we are working in serverless environments. The reasoning is that because serverless is designed for scalability and agility, some traditional performance metrics are moot and not relevant in voice.

The short answer – look at your logs. You will see the same issues we have become accustomed to in mobile, desktop, and server-based apps. And you will start to see some patterns that you would not recognize from app performance in those more traditional computing environments.
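As a rough illustration of what looking at your logs can mean in practice, the sketch below computes three of the traditional metrics mentioned earlier (error rate, average response time, and request rate) from a list of request records. The `RequestLog` record, its field names, and the sample values are assumptions made for this example; real platform or serverless logs will have a different shape and need their own parsing.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class RequestLog:
    """One voice app request. A hypothetical record shape for illustration."""
    timestamp: datetime
    latency_ms: float
    ok: bool  # True if the voice app returned a valid response


def summarize(records: List[RequestLog]) -> Dict[str, float]:
    """Compute error rate, average latency, and requests per minute."""
    if not records:
        return {"requests": 0, "error_rate": 0.0,
                "avg_latency_ms": 0.0, "requests_per_minute": 0.0}
    total = len(records)
    errors = sum(1 for r in records if not r.ok)
    avg_latency = sum(r.latency_ms for r in records) / total
    span = max(r.timestamp for r in records) - min(r.timestamp for r in records)
    # Treat very short windows as one minute to avoid dividing by zero.
    minutes = max(span.total_seconds() / 60, 1.0)
    return {
        "requests": total,
        "error_rate": errors / total,
        "avg_latency_ms": avg_latency,
        "requests_per_minute": total / minutes,
    }


# Made-up sample data: four requests over four minutes, one of them failed.
start = datetime(2024, 1, 1, 12, 0, 0)
SAMPLE = [
    RequestLog(start, 400.0, True),
    RequestLog(start + timedelta(minutes=1), 600.0, True),
    RequestLog(start + timedelta(minutes=2), 2000.0, False),
    RequestLog(start + timedelta(minutes=4), 1000.0, True),
]
SUMMARY = summarize(SAMPLE)
print(SUMMARY)
```

Tracking these numbers per day or per hour, rather than once, is what makes a sudden change visible.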

The Need for Continuous Testing to Avoid the Error Spike

One of those differences related to voice app performance stems from the dynamic nature of the artificial intelligence (AI) governing automatic speech recognition (ASR) and natural language understanding (NLU).

Right off the bat, an issue that developers are sure to face is that ASR and NLU are imperfect. In an episode of the O’Reilly Design Podcast, UX expert Cathy Pearl states:

“Another thing that’s really important to understand about voice is that speech recognition is not perfect. Yes, it’s way, way, way, way better than it used to be, but it still makes a lot of mistakes. You have to build a graceful error recovery into every voice system no matter what. I don’t think, personally, that it will ever be 100% accurate. Human speech recognition is certainly not 100% accurate. You have to spend a lot of time thinking about your error recovery.”

What’s more, though the AI platforms are imperfect, one of their selling features is that they will improve over time. They are constantly learning, and therefore steadily getting better at recognizing requests, interpreting speech, and understanding intent. But that means they are also changing. The group product manager for Google Assistant, Brad Abrams, recently commented in a podcast interview that the AI is updated a couple of times per week. We know from experience that updates are made regularly to Alexa as well.

This continuous updating sounds great and in many cases delivers exactly what it promises – better AI performance. However, better overall AI performance doesn’t necessarily mean better performance related to your voice app.

A change could result in an improvement, have no effect, or make things worse. All changes run the risk of causing regression errors. What if someone updated the OS version of the server running your app overnight? Might this be a cause for concern? We have talked to many developers who have experienced error spikes related to changes or events on the underlying platforms. Errors suddenly start piling up that were not visible the previous day.

In the old app model, we were accustomed to a stable environment. We also knew when the environment was changing because we controlled it. The environment had a specific and stable OS version, processing power capacity, memory, bandwidth access, and other defined capabilities.

The serverless model and the presence of continuously updating AI mean we do not have that stability. Testing is therefore not just something you do before launch. You must do it continuously.

As an industry, developers have embraced continuous delivery and continuous integration for mobile and web apps. These approaches have revolutionized application development. AI and voice assistants introduce the need for continuous testing: a form of app testing that validates that performance hasn’t been negatively impacted by unscheduled changes to the AI. This concept is new to app performance and is essential to the voice app user experience equation.
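One way to picture continuous testing is as a fixed suite of utterances that is re-run on a schedule and compared against the last known-good result. The sketch below is a minimal illustration under stated assumptions, not a description of any particular tool: the intent names are hypothetical, and `platform_yesterday` and `platform_today` are stand-in functions that simulate the platform's behavior before and after an unannounced AI update. In a real setup the resolver would call the actual voice platform.

```python
from typing import Callable, Dict, List, Tuple

# (utterance, expected intent). All intent names here are hypothetical.
TestCase = Tuple[str, str]


def run_suite(cases: List[TestCase],
              resolve_intent: Callable[[str], str]) -> Dict[str, bool]:
    """Run every utterance through the resolver and record pass/fail."""
    return {utterance: resolve_intent(utterance) == expected
            for utterance, expected in cases}


def find_regressions(baseline: Dict[str, bool],
                     current: Dict[str, bool]) -> List[str]:
    """Return utterances that passed in the baseline run but fail now."""
    return sorted(u for u, passed in baseline.items()
                  if passed and not current.get(u, False))


CASES: List[TestCase] = [
    ("play jazz", "PlayMusicIntent"),
    ("what is my balance", "BalanceIntent"),
    ("stop", "StopIntent"),
]


def platform_yesterday(utterance: str) -> str:
    """Stand-in for the platform before an update (simulated)."""
    mapping = {"play jazz": "PlayMusicIntent",
               "what is my balance": "BalanceIntent",
               "stop": "StopIntent"}
    return mapping.get(utterance, "FallbackIntent")


def platform_today(utterance: str) -> str:
    """Stand-in for the platform after an update that changed one result."""
    mapping = {"play jazz": "PlayMusicIntent",
               "what is my balance": "FallbackIntent",
               "stop": "StopIntent"}
    return mapping.get(utterance, "FallbackIntent")


BASELINE = run_suite(CASES, platform_yesterday)
CURRENT = run_suite(CASES, platform_today)
REGRESSIONS = find_regressions(BASELINE, CURRENT)
print(REGRESSIONS)
```

The voice app's own code is identical in both runs. Only the simulated platform changed, which is why a test that passed before launch cannot stand in for one that runs every day.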

Voice App Operations, Maintenance, and Management

When we recognize that app performance is important to voice app user experience and that AI-based systems are different, another question arises. How do we overcome these challenges quickly, comprehensively, and repeatably?

In the past, we had several software packages specifically related to performance management, monitoring, logging, and alerting. However, these tools were all created for the old model where we had known computing environments that were stable.

The serverless and AI model for computing environments introduces new variables that the old applications do not address. That is why Bespoken has developed a set of automated testing and monitoring tools built exclusively for voice apps and AI environments.

We developed these tools to provide visibility into performance. In particular, our Usability Performance Testing measures how well the automatic speech recognition (ASR) and natural language understanding (NLU) are working for your voice app. You can get started with Usability Performance Testing here.

When Your Voice App Matters, Performance Is Essential

Amazon Alexa skills and Google Assistant apps are just the start of a new model for app delivery based on AI and dynamic computing environments. App performance metrics are essential to user experience and require new tools to accommodate our new computing architectures. The question for developers is this:

Are you confident your voice app is working right now?

Your testing from last week may no longer be a valid indicator of expected app performance today. If you are a hobbyist, app performance may not be that important. But any voice app that people really care about requires continuous testing and monitoring.

We are starting to see voice apps conduct financial transactions, support personal health, deliver entertainment, and enable brand engagement. The organizations behind these apps care about voice user experience and that starts with performance. And you should too.

Learn more about testing and monitoring Alexa skills here, and subscribe here to get updates as we launch new features to guarantee your voice app’s performance.

Additionally, sign up for one of our webinars as we do a deep dive on skill testing and automation. These are essential elements to achieve high performance and quality. You can register here!