The world of voice-first is full of acronyms and complex concepts, making it difficult to follow its constant and rapid evolution. Anyone who is interested in this technology faces the challenge of understanding intricate concepts and managing a steep learning curve.

To help people who are unfamiliar with the voice world, we’ve created this Voice First cheat sheet so you can navigate the world of voice interaction easily and conquer the learning curve with elegance and speed.

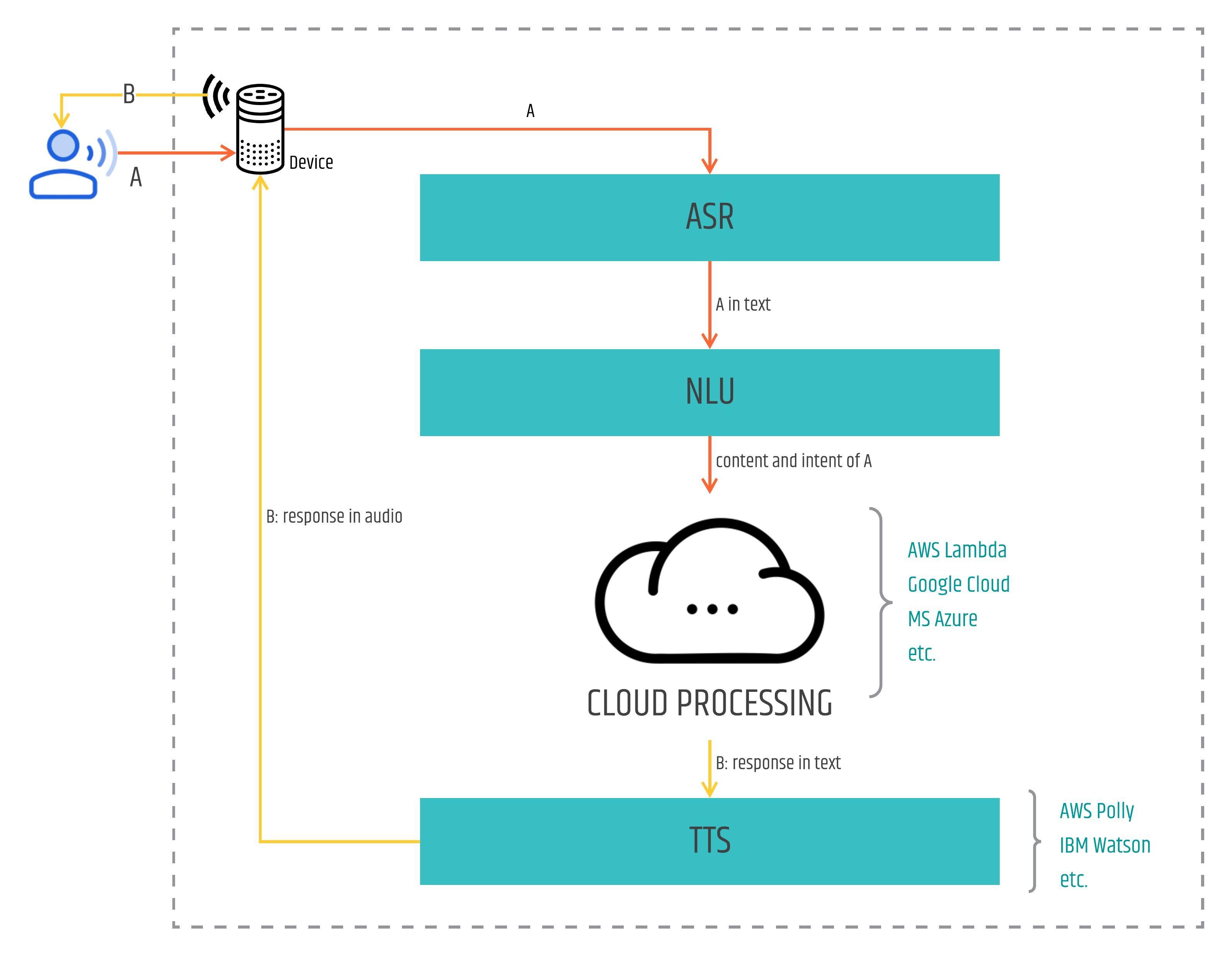

Let’s start by checking this diagram, where you can see the context of voice first.

Devices

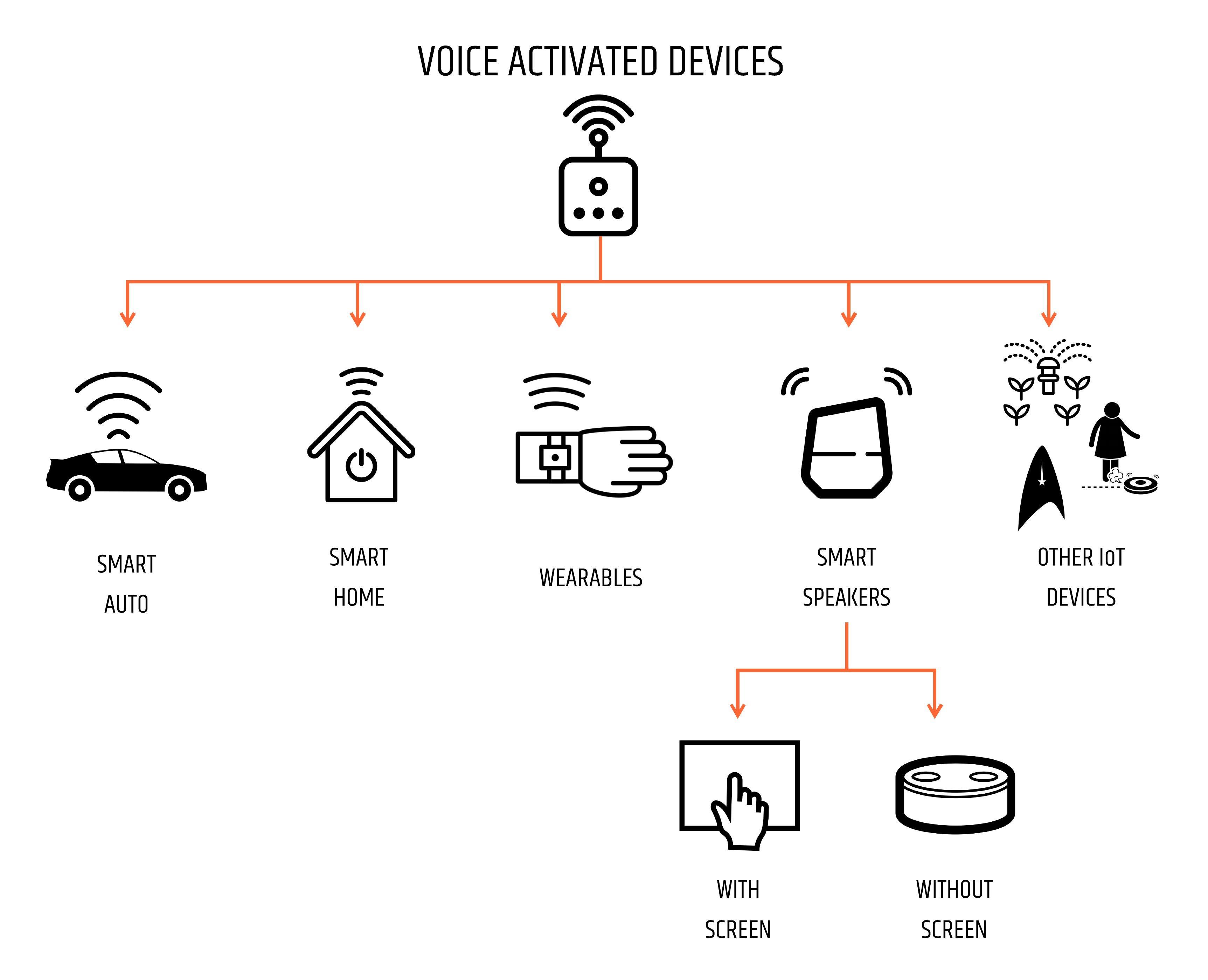

Every human-machine spoken interaction begins and ends on a device. The recent boom in this technology has put devices of all shapes, sizes, and prices within our reach. From smart speakers like Google Home to glasses that let you interact with Alexa, everywhere we look the Internet of Things is getting connected to voice.

With the arrival of devices with a screen, multimodal experiences have become possible: in addition to natural language interaction, the voice app response can include images, text, and videos.

App developers are now adjusting their design paradigms, moving from the traditional [Visual] User Interface (UI) to a Voice User Interface (VUI) – and the same goes for Product Managers, who are now creating Voice User Experiences (VUX), instead of just User Experiences.

Bespoken has created a set of tools that give developers access to the multimodal elements of these enriched voice interactions, enabling testing automation and ensuring the quality of the information presented to users. For more information on how this works, click here.

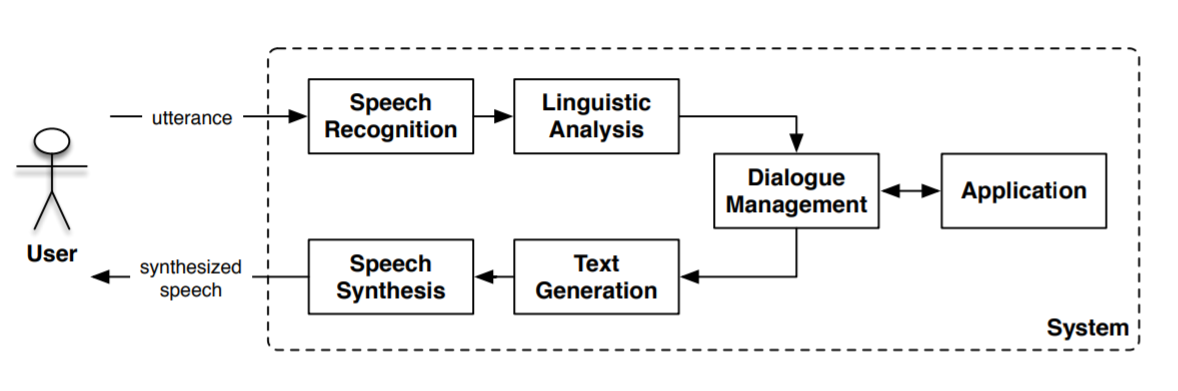

ASR

Automatic Speech Recognition (ASR) is the process that converts what has been said into text; it is also known as Speech-To-Text (STT). ASR is one of the pillars of voice interaction: once the user’s request has been converted into text, a computer can determine what the user asked for and run the program that satisfies that need.

Companies like Nuance, IBM, Google, and Amazon offer ASR platforms or have integrated ASR into their voice-first devices, such as Amazon Alexa or Google Home.

Automated testing with Bespoken is the best way to make sure ASR is working correctly for your voice app. Verify your intents are correctly hit by creating simple yet comprehensive end-to-end test scripts. Read here to learn more.

NLU

The role of Natural Language Understanding (NLU) is to determine the user’s intention behind a spoken request. If ASR produces the literal transcript of what was said, NLU finds the meaning and purpose of the request.

One of the most essential tasks NLU performs is to understand the context of what has been said to discover the correct intention requested by the speaker. Let’s look at this sample interaction:

- What is the weather forecast for Barcelona on Friday?

- Moderate showers are expected in the afternoon.

- Then, book a flight at 11.

Thanks to the NLU, the last request can be executed successfully: it determines that the destination city is Barcelona and that 11 means 11 am on Friday.
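The carryover in this dialogue can be sketched in a few lines of Python. This is only an illustration, not how any assistant platform implements it: the `DialogContext` class, the intent names, and the slot names are all hypothetical, and the sketch assumes an earlier step has already turned "11" into the time value `11:00`.

```python
from dataclasses import dataclass, field


@dataclass
class DialogContext:
    """Remembers slot values mentioned in earlier turns (hypothetical helper)."""

    slots: dict = field(default_factory=dict)

    def resolve(self, intent: str, new_slots: dict, required: list) -> dict:
        # Remember everything the current turn mentioned explicitly.
        self.slots.update(new_slots)
        # Fill each required slot from the accumulated context; None means
        # the assistant would still have to ask the user for that value.
        filled = {name: self.slots.get(name) for name in required}
        return {"intent": intent, "slots": filled}


context = DialogContext()

# Turn 1: "What is the weather forecast for Barcelona on Friday?"
context.resolve("GetWeather", {"city": "Barcelona", "day": "Friday"}, ["city", "day"])

# Turn 3: "Then, book a flight at 11." Only the time is stated explicitly.
booking = context.resolve("BookFlight", {"time": "11:00"}, ["city", "day", "time"])
print(booking)
```

The booking request ends up with the city and day taken from the first turn, even though the user never repeated them.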

The NLU and the Dialog Manager also work together to extract additional information from the user’s utterances, such as the parameters needed to execute the request properly.

Bespoken end-to-end testing scripts allow you to quickly verify the Dialog Interface of your Alexa skills. Take a look at how we used them to test one of Amazon’s samples and tutorials, the College Finder sample app.
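To make the idea of intents and parameters concrete, here is a deliberately simple sketch that maps a transcript to an intent and pulls out its parameters (often called slots). Production NLU relies on trained models rather than hand-written patterns; the regular expressions, intent names, and the `understand` function below are assumptions made only for illustration.

```python
import re

# Hypothetical intents, each paired with a pattern whose named groups are the slots.
INTENT_PATTERNS = {
    "GetWeather": re.compile(
        r"weather forecast for (?P<city>\w+) on (?P<day>\w+)", re.IGNORECASE
    ),
    "BookFlight": re.compile(r"book a flight at (?P<time>\d{1,2})", re.IGNORECASE),
}


def understand(transcript: str) -> dict:
    """Return the first matching intent and its slots, or a fallback intent."""
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(transcript)
        if match:
            return {"intent": intent, "slots": match.groupdict()}
    return {"intent": "Fallback", "slots": {}}


result = understand("What is the weather forecast for Barcelona on Friday?")
print(result)
```

The input to `understand` is the text produced by ASR; the output is what the rest of the voice app needs in order to act on the request.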

Cloud Processing

Here lies the code that handles the user’s requests. When the Dialog Manager / NLU has identified the user’s intent, the related code is executed, and a response is generated for the user.

To minimize response times, it is very common to store and execute voice app code in the cloud. Amazon Alexa encourages skill developers to use AWS Lambda, and Google Actions code is usually hosted on Google Cloud Functions. These platforms offer great performance and flexible pricing (developers only pay for usage), but they are not mandatory: voice app code can also be exposed as a web service from your own servers.
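As a rough illustration of what such cloud-hosted code can look like, the sketch below follows the `lambda_handler(event, context)` shape of a Python function on AWS Lambda and a simplified version of the Alexa request and response JSON. The intent name, the handler functions, and the sample event are hypothetical; check the platform documentation for the full format before relying on it.

```python
def build_response(text: str, end_session: bool = True) -> dict:
    """Wrap plain text in a simplified Alexa-style response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def handle_weather(slots: dict) -> dict:
    # Hypothetical intent handler; a real skill would call a weather service here.
    city = slots.get("city", {}).get("value", "your city")
    return build_response(f"Here is the forecast for {city}.")


# Maps (hypothetical) intent names to the code that fulfils them.
HANDLERS = {"GetWeatherIntent": handle_weather}


def lambda_handler(event: dict, context: object) -> dict:
    """Entry point: route the incoming request to the matching intent handler."""
    request = event.get("request", {})
    if request.get("type") == "LaunchRequest":
        return build_response("Welcome. Ask me about the weather.", end_session=False)
    if request.get("type") == "IntentRequest":
        intent = request.get("intent", {})
        handler = HANDLERS.get(intent.get("name"))
        if handler:
            return handler(intent.get("slots", {}))
    return build_response("Sorry, I did not understand that.")


# A trimmed-down sample event, useful for trying the handler locally.
sample_event = {
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "GetWeatherIntent",
            "slots": {"city": {"name": "city", "value": "Barcelona"}},
        },
    }
}
reply = lambda_handler(sample_event, None)
print(reply["response"]["outputSpeech"]["text"])
```

Because the handler is a plain function that takes and returns dictionaries, the same code can be called from a cloud function, from a web service on your own servers, or directly from a test.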

You can rapidly accelerate your development cycle by running your voice app code locally, avoiding time-consuming deployments to the cloud while you are coding and debugging/testing easily with Bespoken CLI. Read here to learn how to get started.

TTS

Once the voice app code has generated a response, usually as text, it must be delivered to the user as audio. The technology in charge of this transformation is called Text-To-Speech (TTS) or Speech Synthesis. Since user requests can be made in multiple languages, platforms such as Google Assistant and Amazon Alexa offer different voices so they can reply in the user’s language and, where possible, with the same accent.
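Voice platforms commonly accept the text to be spoken as SSML (Speech Synthesis Markup Language), which lets the voice app hint at things like the language of a phrase. The sketch below builds such markup with Python's standard library only; the `to_ssml` helper is hypothetical, and which SSML tags and locales are honored depends on the platform.

```python
from xml.sax.saxutils import escape, quoteattr


def to_ssml(text: str, locale: str = "") -> str:
    """Wrap response text in SSML, optionally tagging its language."""
    body = escape(text)  # escape &, < and > so the markup stays well formed
    if locale:
        body = f"<lang xml:lang={quoteattr(locale)}>{body}</lang>"
    return f"<speak>{body}</speak>"


print(to_ssml("Moderate showers are expected in the afternoon."))
print(to_ssml("Se esperan lluvias moderadas por la tarde.", locale="es-ES"))
```

The TTS engine then turns this markup into audio, choosing a voice that fits the requested language where one is available.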

Where in the Voice First World is the AI?

NLU, and more generally Natural Language Processing (NLP, the discipline that encompasses NLU along with others such as Natural Language Generation, or NLG), is a type of AI.

These components use AI techniques such as machine learning, including neural networks. Machine learning lets a system learn to perform a task from a series of examples. This is why it is said that Amazon Alexa or Google Assistant are always learning; they use the utterances users issue as input to their learning activities. Likewise, neural networks allow systems to perform activities such as skill discovery or contextual slot carryover, that is, identifying parameters mentioned earlier in a conversation and reusing them in later turns.

IoT and Voice First

All the components reviewed here can be packaged in small kits, and incorporated into almost any IoT device or experience. Miniaturization of hardware and cloud computing allow these things to be always connected to the Internet, delivering on the promise of IoT with user-friendly voice interaction. Through voice interaction technology the Internet of Things opens up to a world of new use cases and experiences, enabling smart devices to understand human needs and deliver what users want, regardless of location or context.

According to Gartner Research, an estimated 20.4 billion IoT devices will be deployed by the year 2020, and voice will be the primary mode of interaction in this world of connected ‘everything’. If you’re working in the area of connected products, your voice strategy can’t wait any longer. Without testing automation, your team will struggle to meet ship dates and to deliver optimal voice experiences that meet users’ needs and make for delightful brand experiences.

Bespoken is here to make sense of this new voice first world, and our world-leading testing automation solutions and services ensure your voice-first applications ship on time and deliver delightful user experiences and the outstanding app ratings your team deserves. Contact us today to learn more – we’re here to help!

contact@bespoken.io

(*) Icon attributions (all from the Noun Project):

- Amazon Echo by Ben Davis

- Cloud processing by Cezary Lopacinski

- wifi by Peter Borges

- Smart Car by Trevor Dsouza

- Smart Home by Manop Leklai

- Smartwatch by DTDesign

- Smart Speaker by mgpjm

- starfleet by Harpal Singh

- Robot vacuum cleaner by Gan Khoon Lay

- spray sprinkler by ProSymbols

- screen by Dinosoft Labs

- Amazon Dot by Nick Bluth